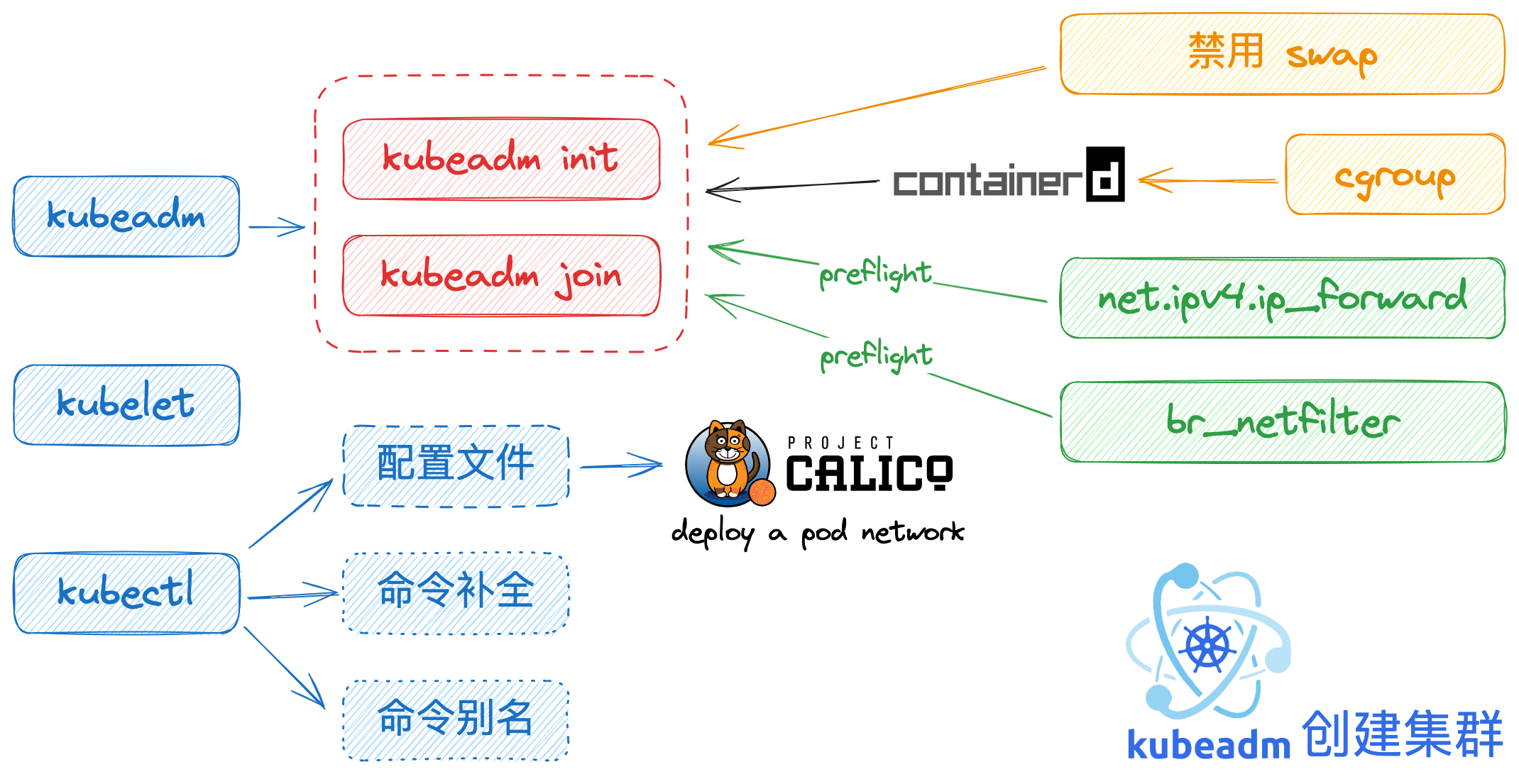

## Preparing the training environment

Reference: [Kubernetes Documentation](https://kubernetes.io/zh/docs/) / [Getting started](https://kubernetes.io/zh/docs/setup/) / [Production environment](https://kubernetes.io/zh/docs/setup/production-environment/) / [Installing Kubernetes with deployment tools](https://kubernetes.io/zh/docs/setup/production-environment/tools/) / [Bootstrapping clusters with kubeadm](https://kubernetes.io/zh/docs/setup/production-environment/tools/kubeadm/) / [Installing kubeadm](https://kubernetes.io/zh/docs/setup/production-environment/tools/kubeadm/install-kubeadm/)

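## B. [Before you begin](https://kubernetes.io/zh-cn/docs/setup/production-environment/tools/kubeadm/install-kubeadm/#%E5%87%86%E5%A4%87%E5%BC%80%E5%A7%8B)

- A compatible Linux host. The Kubernetes project provides generic instructions for Linux distributions based on Debian and Red Hat, and for distributions without a package manager

- 2 GB or more of RAM per machine (any less leaves little room for your applications)

- 2 CPU cores or more

- Full network connectivity between all machines in the cluster (a public or a private network is fine)

- A unique hostname, MAC address, and product_uuid for every node. See [here](https://kubernetes.io/zh-cn/docs/setup/production-environment/tools/kubeadm/install-kubeadm/#verify-mac-address) for more details.

- Certain ports open on the machines. See [here](https://kubernetes.io/zh-cn/docs/setup/production-environment/tools/kubeadm/install-kubeadm/#check-required-ports) for more details.

- Swap disabled. You must disable swap for the kubelet to work properly

- For example, `sudo swapoff -a` disables swap temporarily. To make the change persist across reboots, make sure swap is also disabled in config files such as `/etc/fstab` or `systemd.swap`, depending on how your system is configured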
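The CPU, memory, and swap requirements above can be checked on each machine before going further. The following is a minimal sketch, not part of the official instructions: `meets_minimum` is a hypothetical helper, and the 1700 MiB floor is an assumption, chosen because a VM configured with 2 GB usually reports slightly less than 2048 MiB.

```bash
#!/usr/bin/env bash
# Hypothetical helper: report whether a measured value reaches a minimum.
meets_minimum() {
  local name=$1 actual=$2 required=$3
  if [ "$actual" -ge "$required" ]; then
    echo "OK   ${name}: ${actual} (minimum ${required})"
  else
    echo "FAIL ${name}: ${actual} (minimum ${required})"
    return 1
  fi
}

# Run the checks only where the Linux /proc interface is available.
if [ -r /proc/meminfo ] && command -v nproc >/dev/null; then
  # Number of online CPU cores
  meets_minimum "CPU cores" "$(nproc)" 2 || true

  # Total memory in MiB (MemTotal is reported in KiB)
  mem_mib=$(awk '/^MemTotal:/ {print int($2 / 1024)}' /proc/meminfo)
  meets_minimum "RAM (MiB)" "$mem_mib" 1700 || true

  # Active swap areas: /proc/swaps has one header line, then one line per area
  swap_areas=$(awk 'NR > 1' /proc/swaps 2>/dev/null | wc -l)
  if [ "$swap_areas" -eq 0 ]; then
    echo "OK   swap: disabled"
  else
    echo "FAIL swap: ${swap_areas} active area(s)"
  fi
fi
```

The script only reads system information, so it can be run as a regular user on every node.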

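## U. [Verify that the MAC address and product_uuid are unique on every node](https://kubernetes.io/zh-cn/docs/setup/production-environment/tools/kubeadm/install-kubeadm/#verify-mac-address)

- Use `ip link` or `ifconfig -a` to get the MAC addresses of the network interfaces

- Use `sudo cat /sys/class/dmi/id/product_uuid` to check the product_uuid

Hardware devices usually have unique addresses, but some virtual machines may share identical values. Kubernetes uses these values to uniquely identify the nodes in the cluster. If they are not unique on every node, the installation may [fail](https://github.com/kubernetes/kubeadm/issues/31)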
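Once the values have been collected from every node, duplicates can be spotted mechanically. The sketch below assumes the values were gathered into lines of the form `<node> <value>`; `find_duplicates` is a hypothetical helper and the MAC addresses are made up for illustration.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read "<node> <value>" lines on stdin and print every
# value that occurs on more than one node, followed by the nodes sharing it.
find_duplicates() {
  awk '{ count[$2]++; nodes[$2] = nodes[$2] " " $1 }
       END { for (v in count) if (count[v] > 1) print v ":" nodes[v] }'
}

# Example with made-up MAC addresses: the two workers share one address.
printf '%s\n' \
  'k8s-master  00:0c:29:aa:bb:01' \
  'k8s-worker1 00:0c:29:aa:bb:02' \
  'k8s-worker2 00:0c:29:aa:bb:02' \
  | find_duplicates
```

An empty result means all values are unique. The same helper works for product_uuid values, for example lines produced on each node with `echo "$(hostname) $(sudo cat /sys/class/dmi/id/product_uuid)"`.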

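## V. Virtual machine

> New... /
> Create a custom virtual machine /
> Linux / `Ubuntu 64-bit`

- Settings

| ID | VM setting | Recommended | Default | Notes |
| :--: | :--------: | :--------------------: | :-----: | :----------: |
| 1 | Processors | - | 2 | Minimum requirement |
| 2 | Memory | - | 4096 MB | Save memory |
| 3 | Display | Uncheck `Accelerate 3D Graphics` | Checked | Save memory |
| 4.1 | Network Adapter 1 | - | nat | Internet access required |
| 4.2 | Network Adapter 2 | - | host only | Static IP |
| 5 | Hard disk | `40` GB | 20 GB | Enough space for the exercises |
| 6 | Firmware type | UEFI | Legacy BIOS | VMware Fusion supports nested virtualization |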

- Resulting configuration

| ID | HOSTNAME | CPU cores | RAM | DISK | NIC |
| :--: | :------------------: | :----------: | :------------: | :-------: | :-----: |
| 1 | `k8s-master` | 2 or more | 4 GB or more | 40 GB | 1. nat, 2. host only |
| 2 | `k8s-worker1` | Same as above | 2 GB or more | Same as above | Same as above |
| 3 | `k8s-worker2` | Same as above | Same as above | Same as above | Same as above |

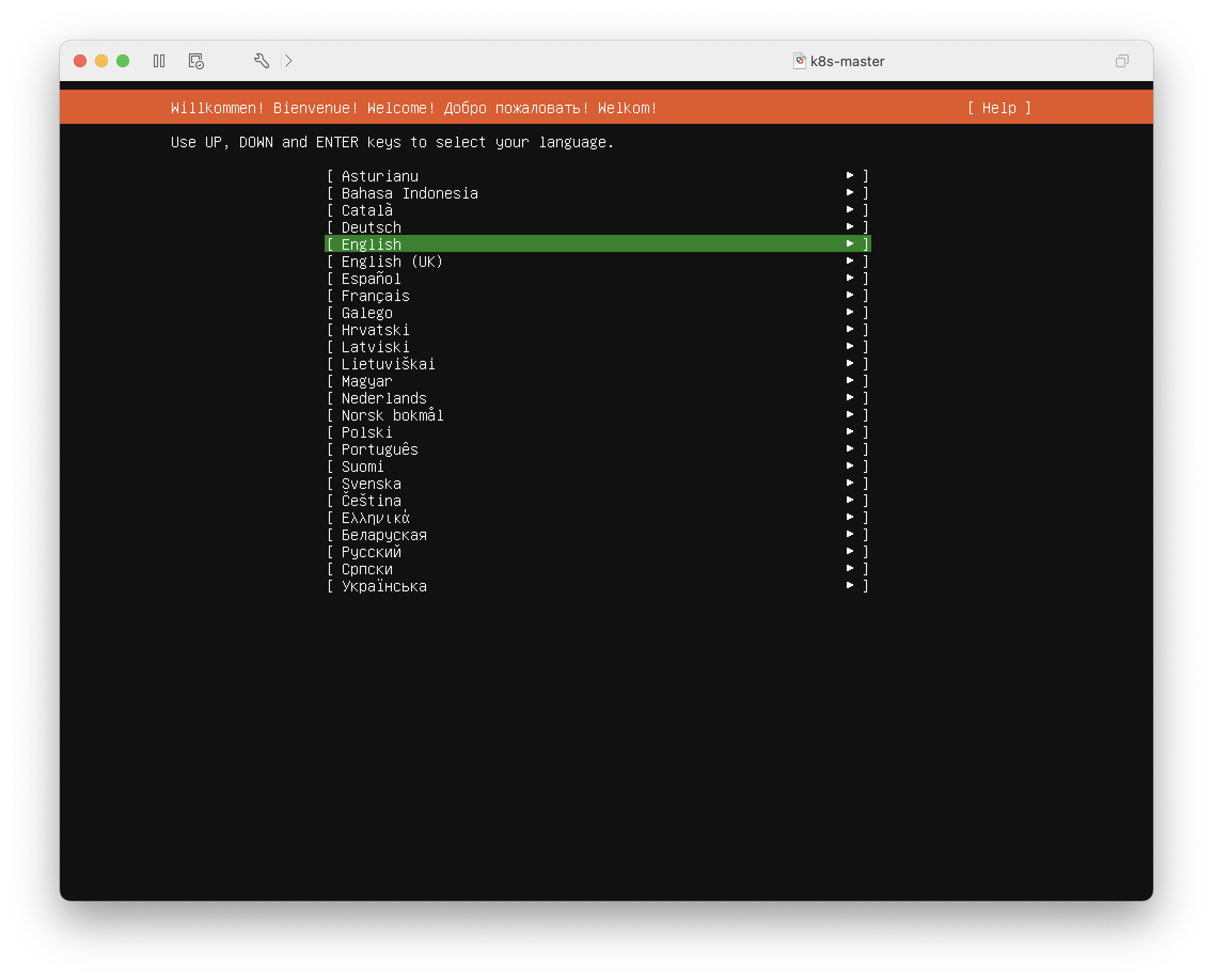

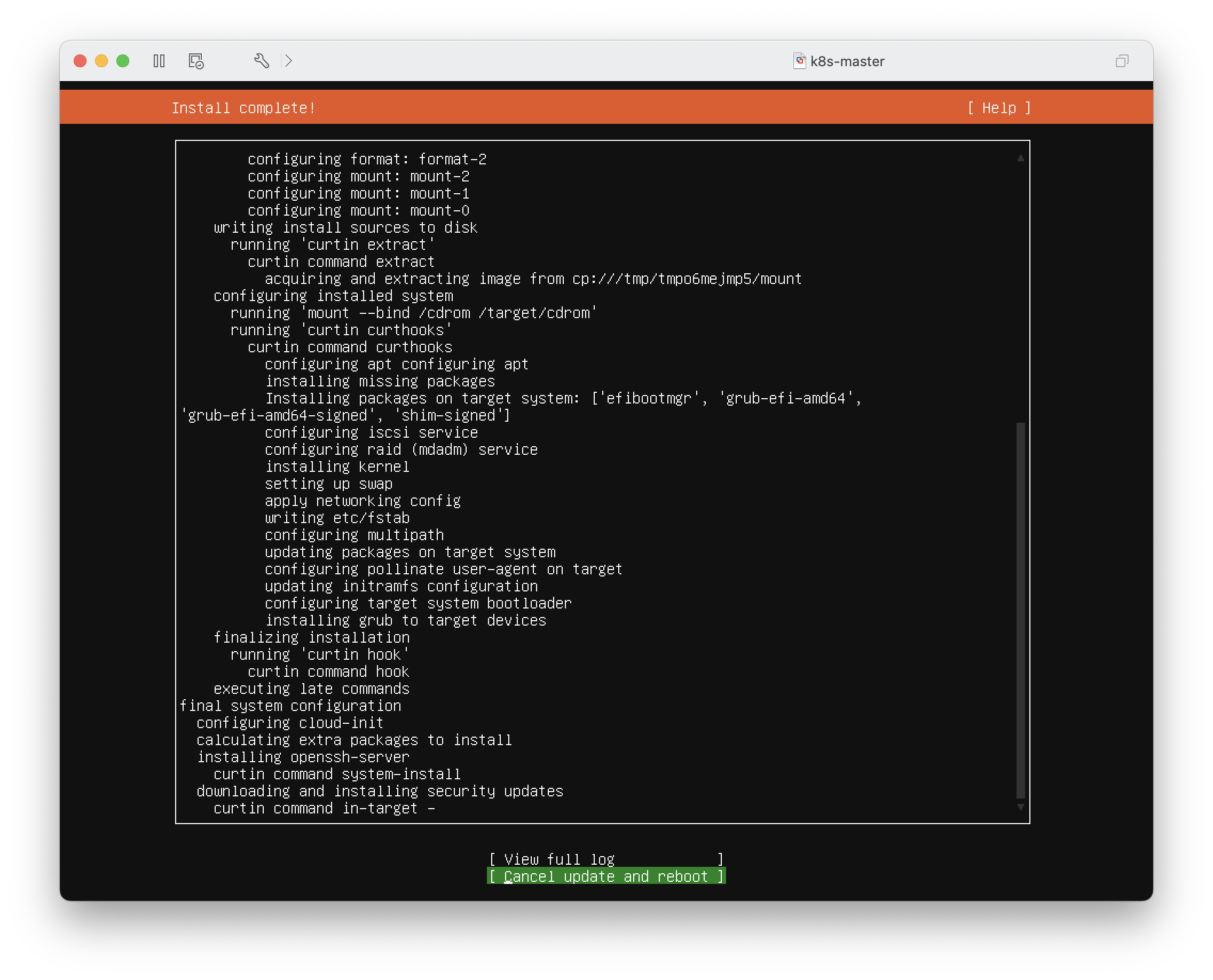

## I. Install Ubuntu 20.04 LTS

**x86**

https://mirror.nju.edu.cn/ubuntu-releases/20.04/ubuntu-20.04.6-live-server-amd64.iso

**arm64**

https://cdimage.ubuntu.com/releases/20.04/release/ubuntu-20.04.5-live-server-arm64.iso

https://mirror.nju.edu.cn/ubuntu-cdimage/releases/20.04/release/ubuntu-20.04.5-live-server-arm64.iso

1. Willkommen! Bienvenue! Welcome! Welkom!

[ `English` ]

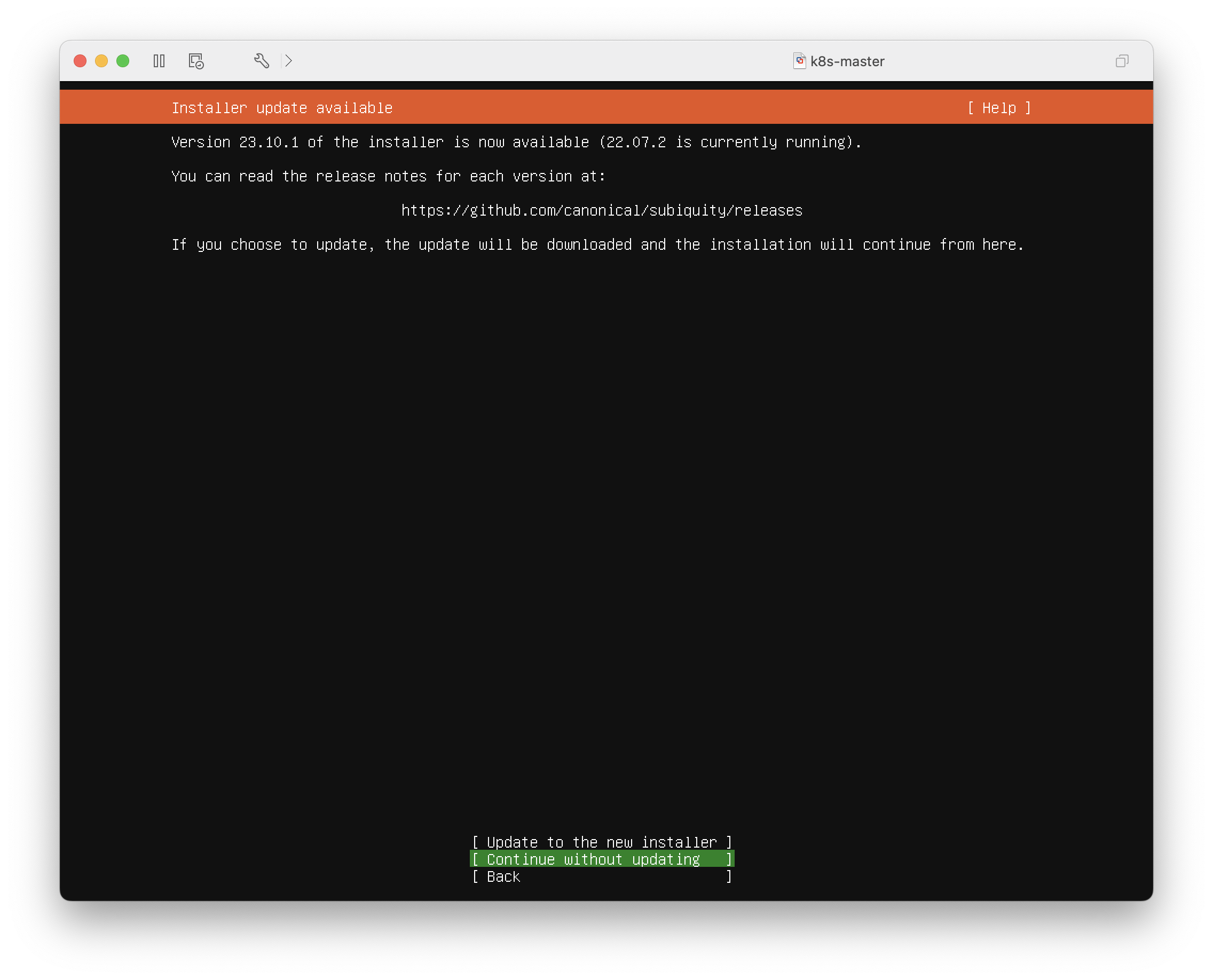

2. Installer update available

[ `Continue without updating` ]

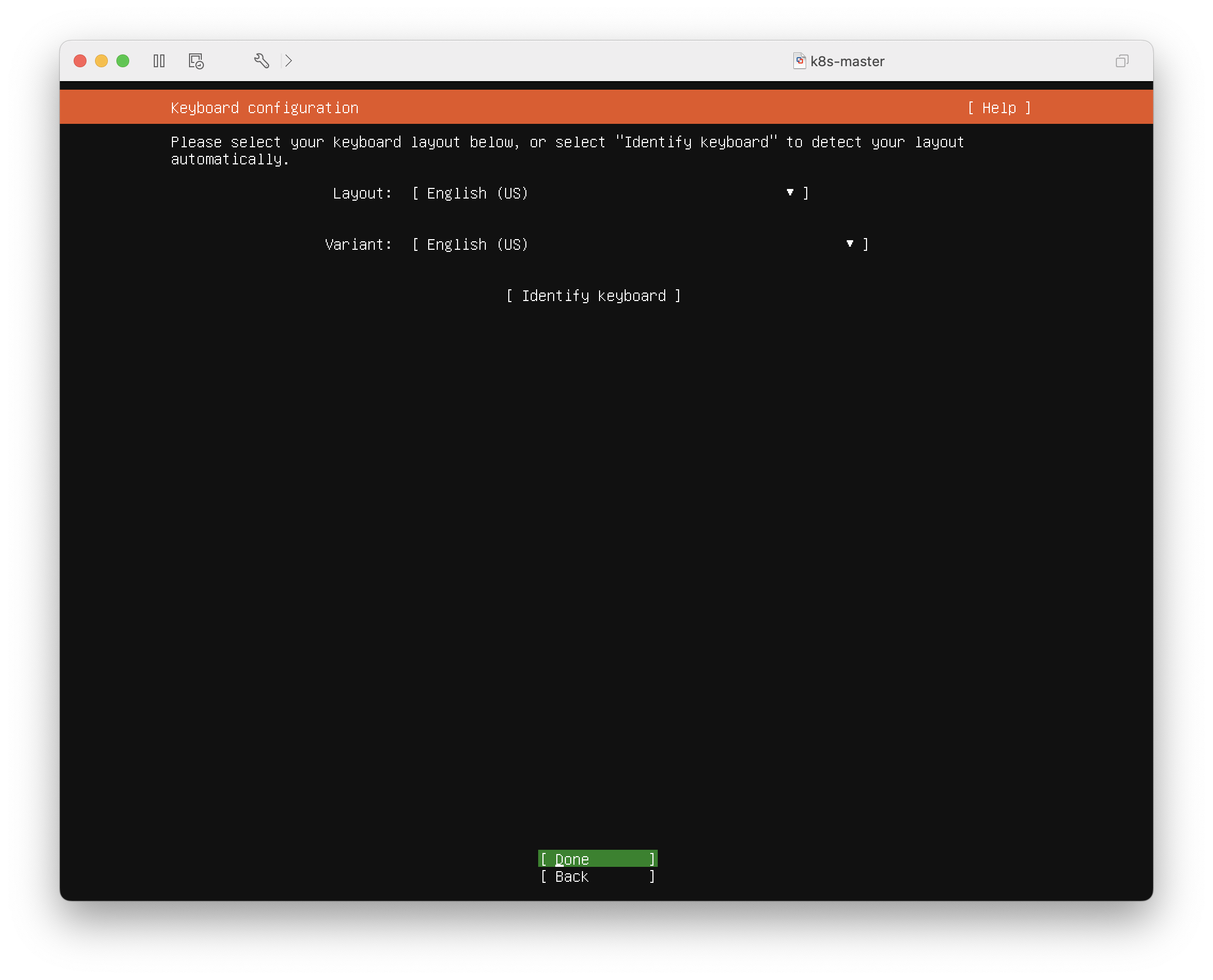

3. Keyboard configuration

[ `Done` ]

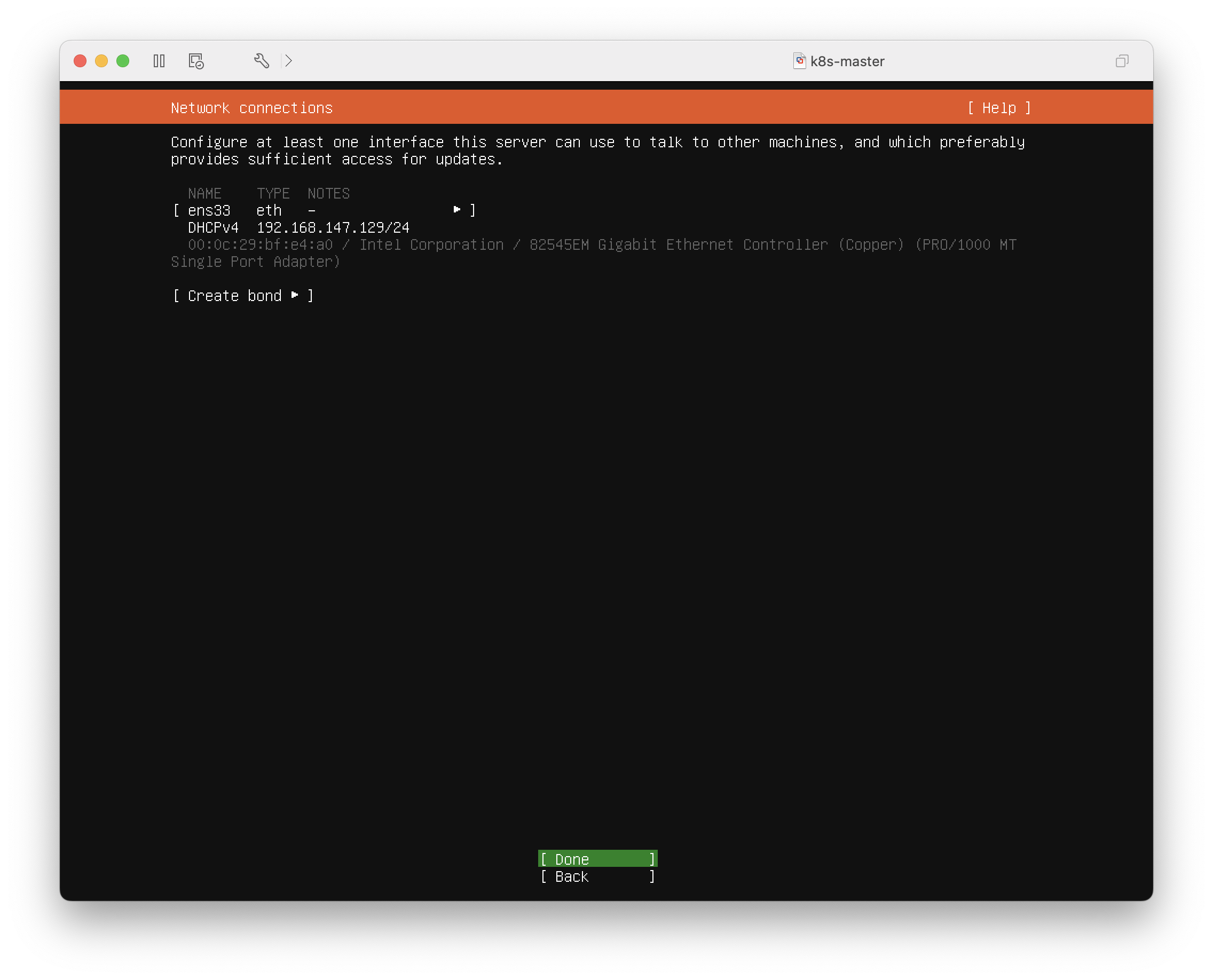

4. Network connections

[ `Done` ]

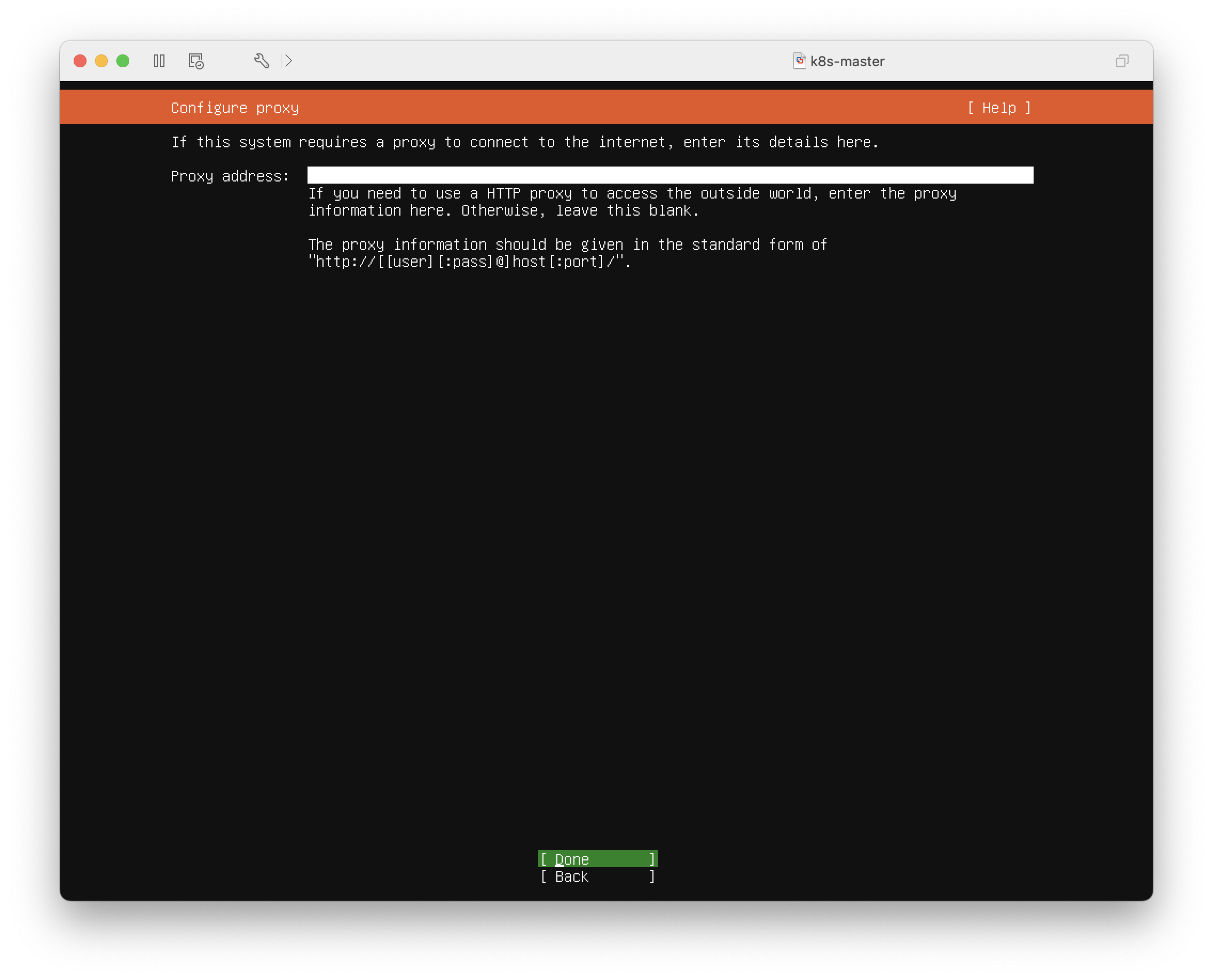

5. Configure proxy

[ `Done` ]

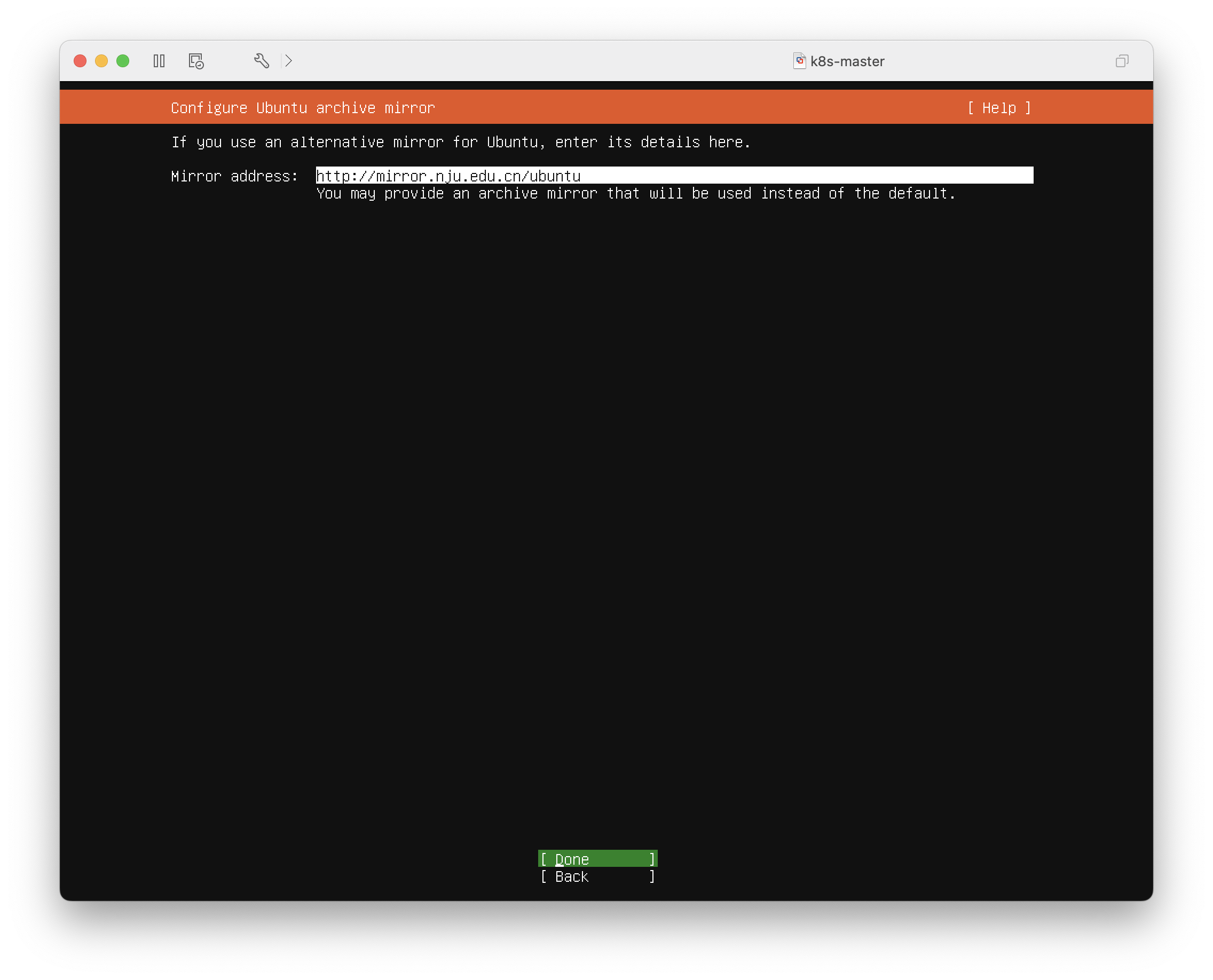

6. Configure Ubuntu archive mirror

- **x86**

Mirror address: http://mirror.nju.edu.cn/ubuntu

/ [ `Done` ]

- **arm64**

Mirror address: http://mirror.nju.edu.cn/ubuntu-ports

/ [ `Done` ]

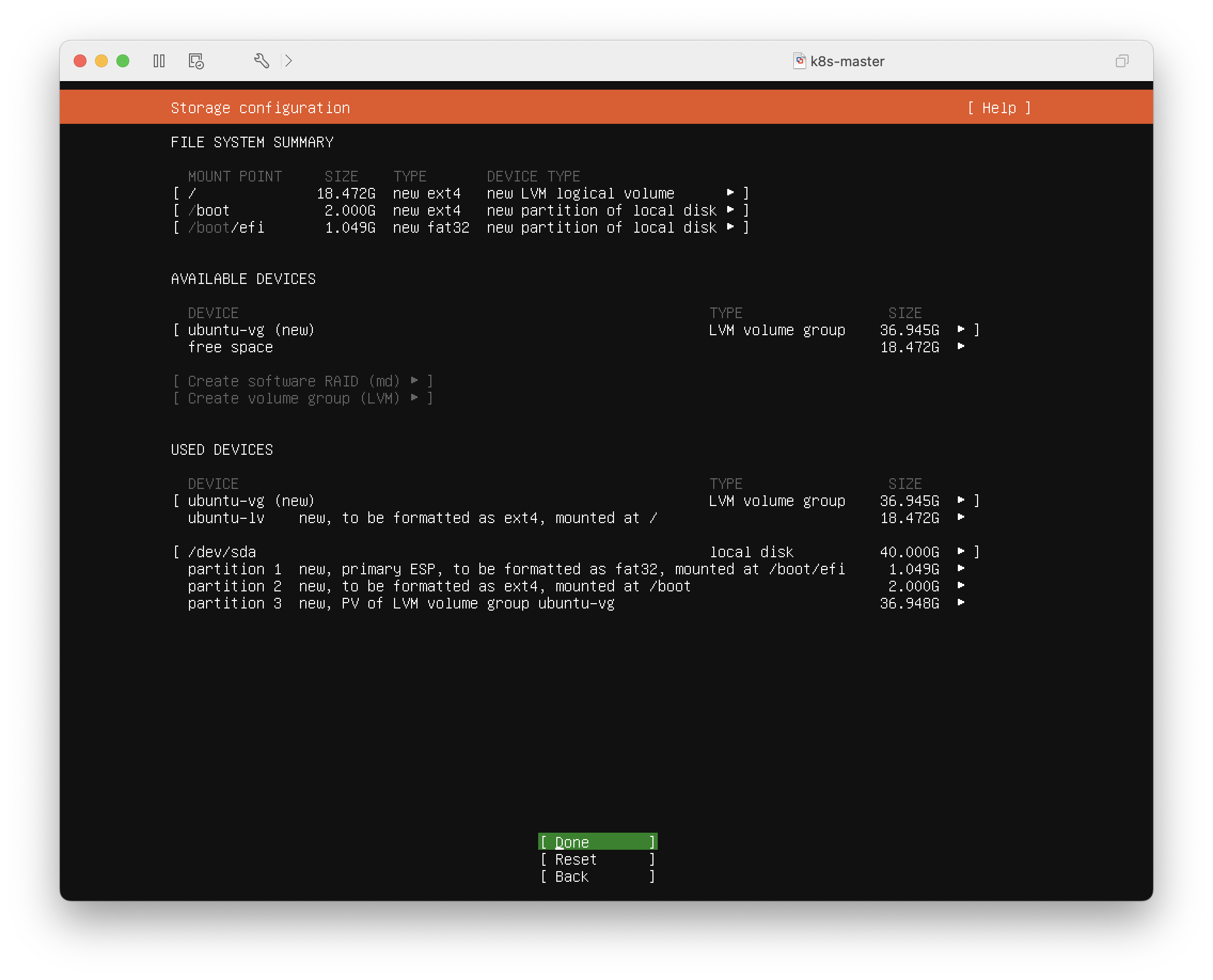

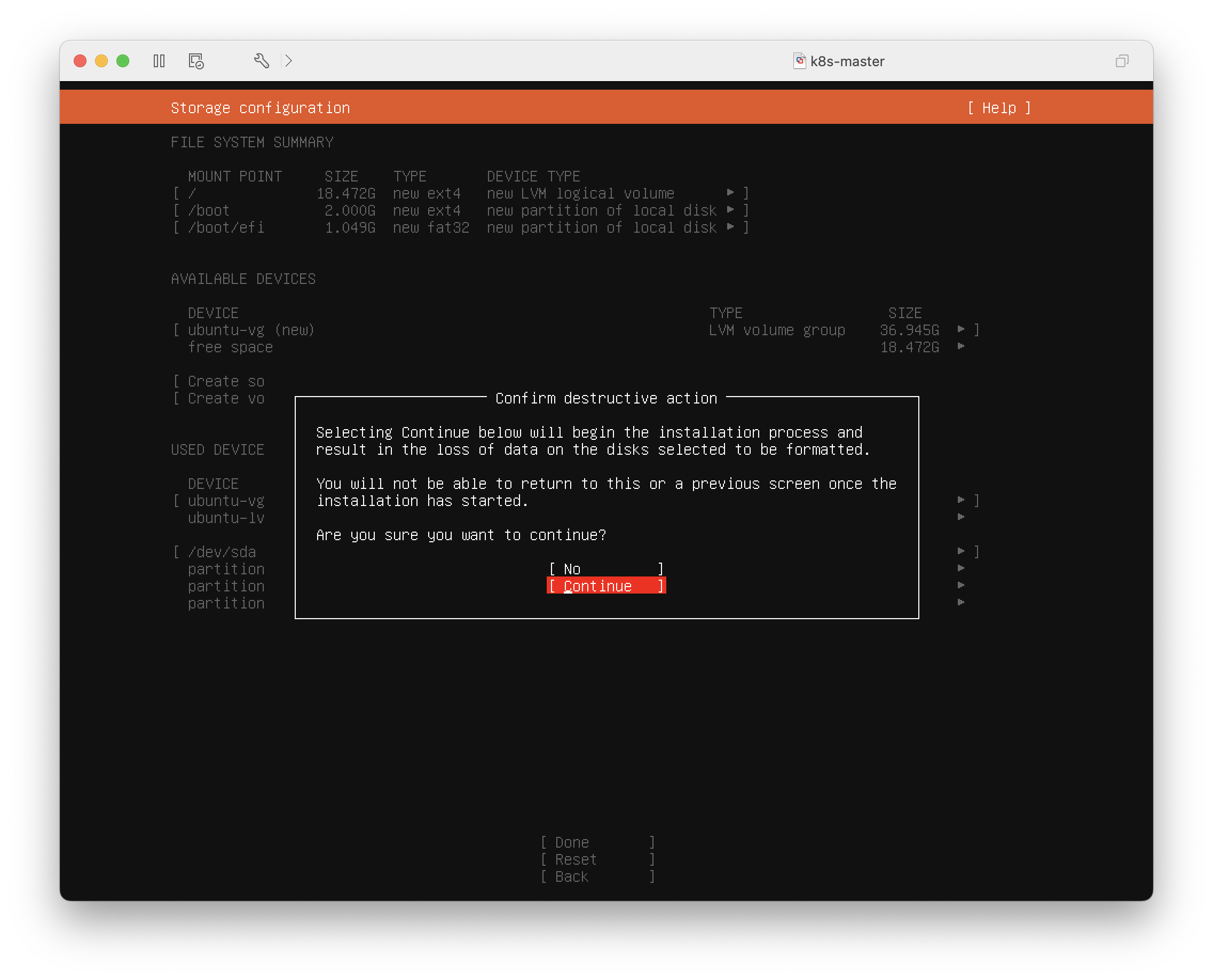

7. Guided storage configuration

[ `Done` ]

8. Storage configuration

[ `Done` ]

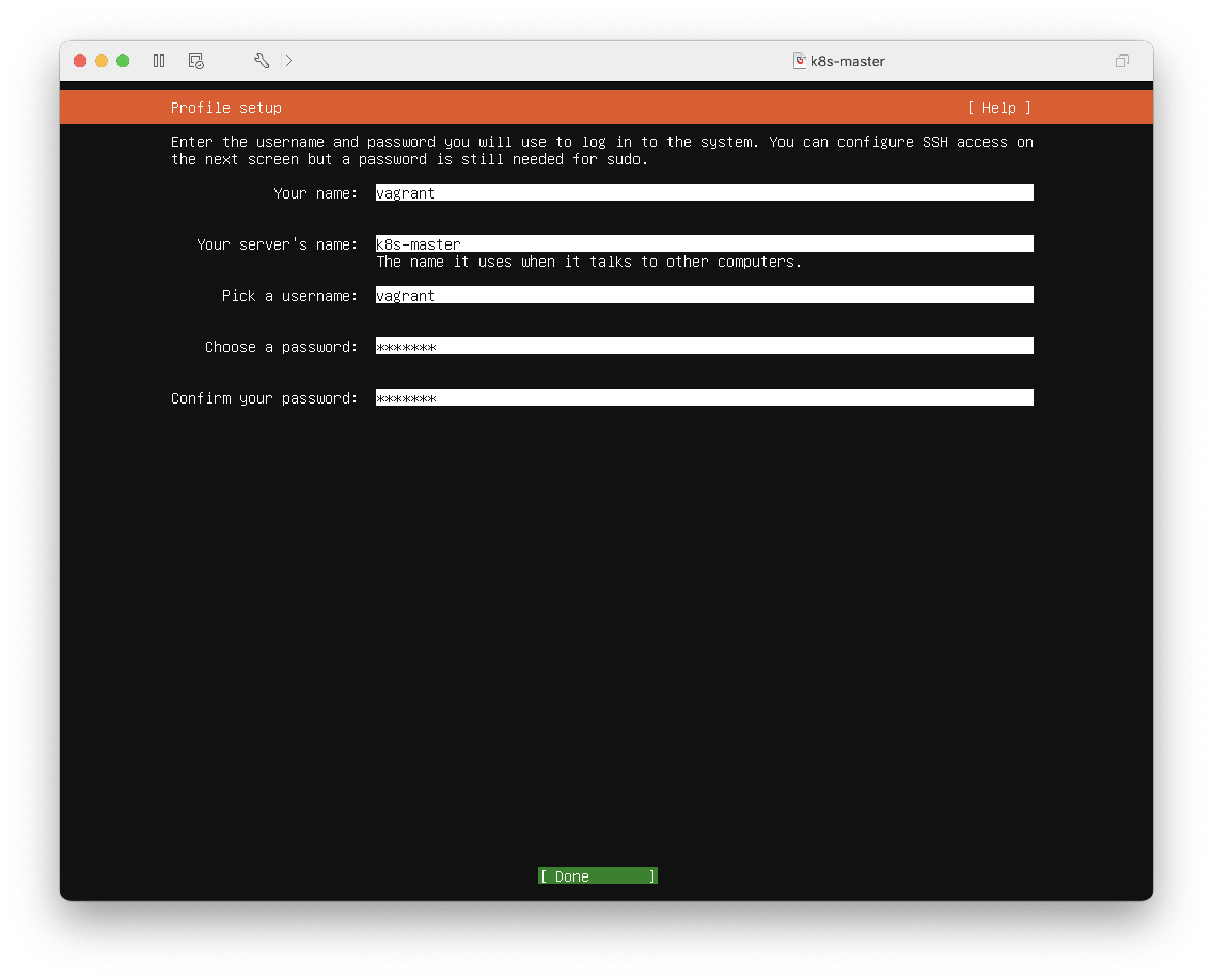

9. Profile setup

Your name: `vagrant`

Your server's name: `k8s-master`

Pick a username: `vagrant`

Choose a password: `vagrant`

Confirm your password: `vagrant`

/ [ `Done` ]

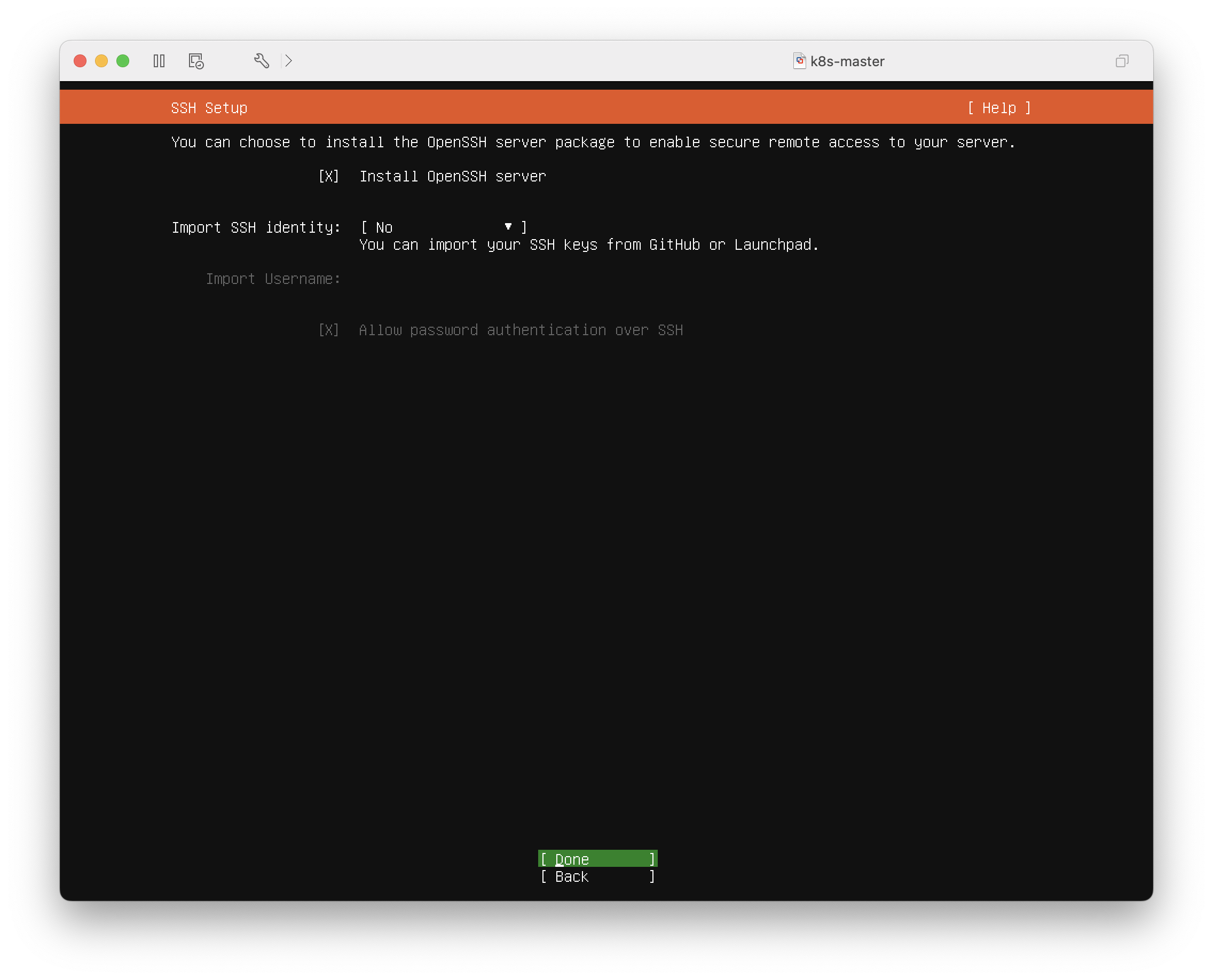

10. SSH Setup

[`X`] Install OpenSSH server

/ [ `Done` ]

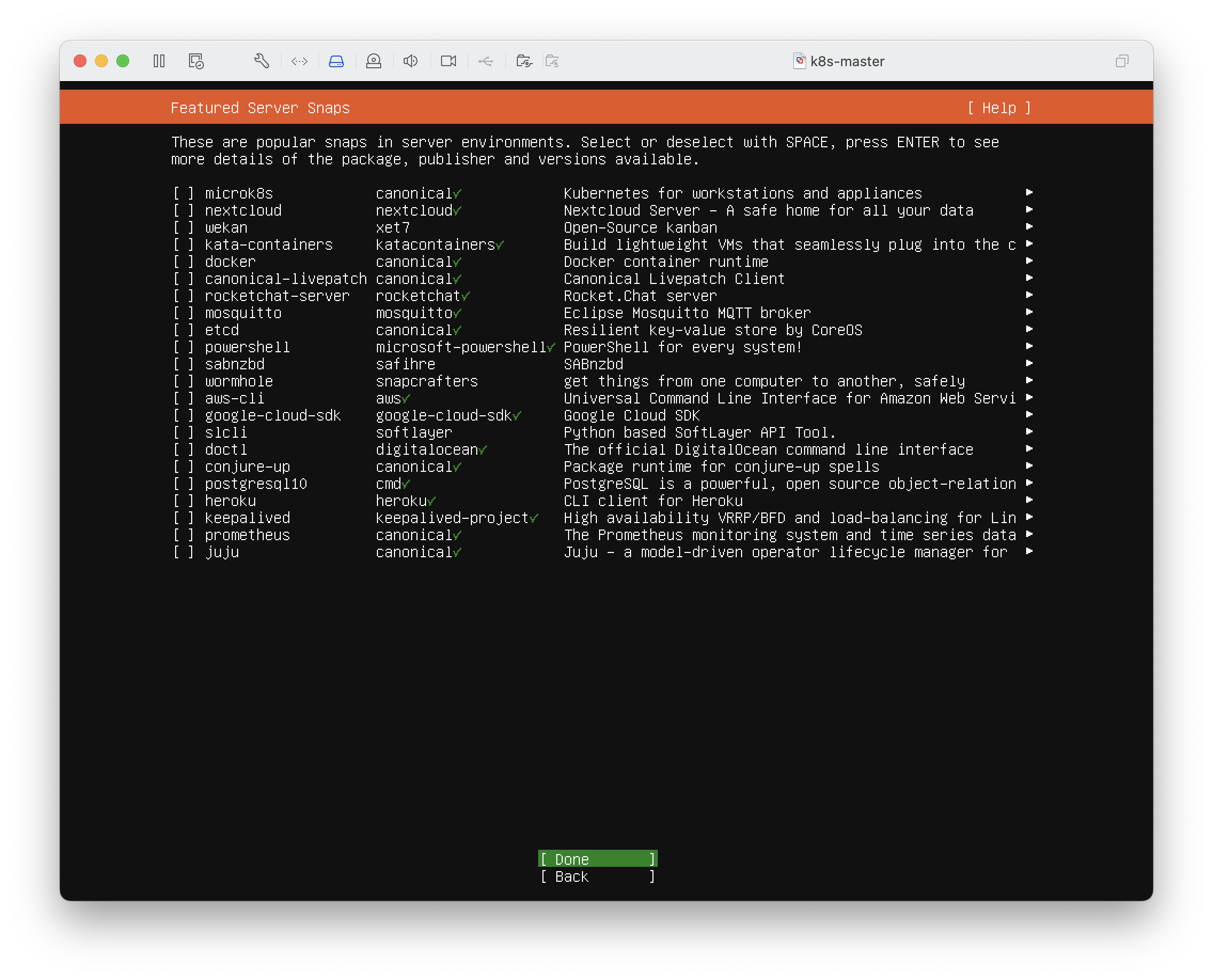

11. Featured Server Snaps

[ `Done` ]

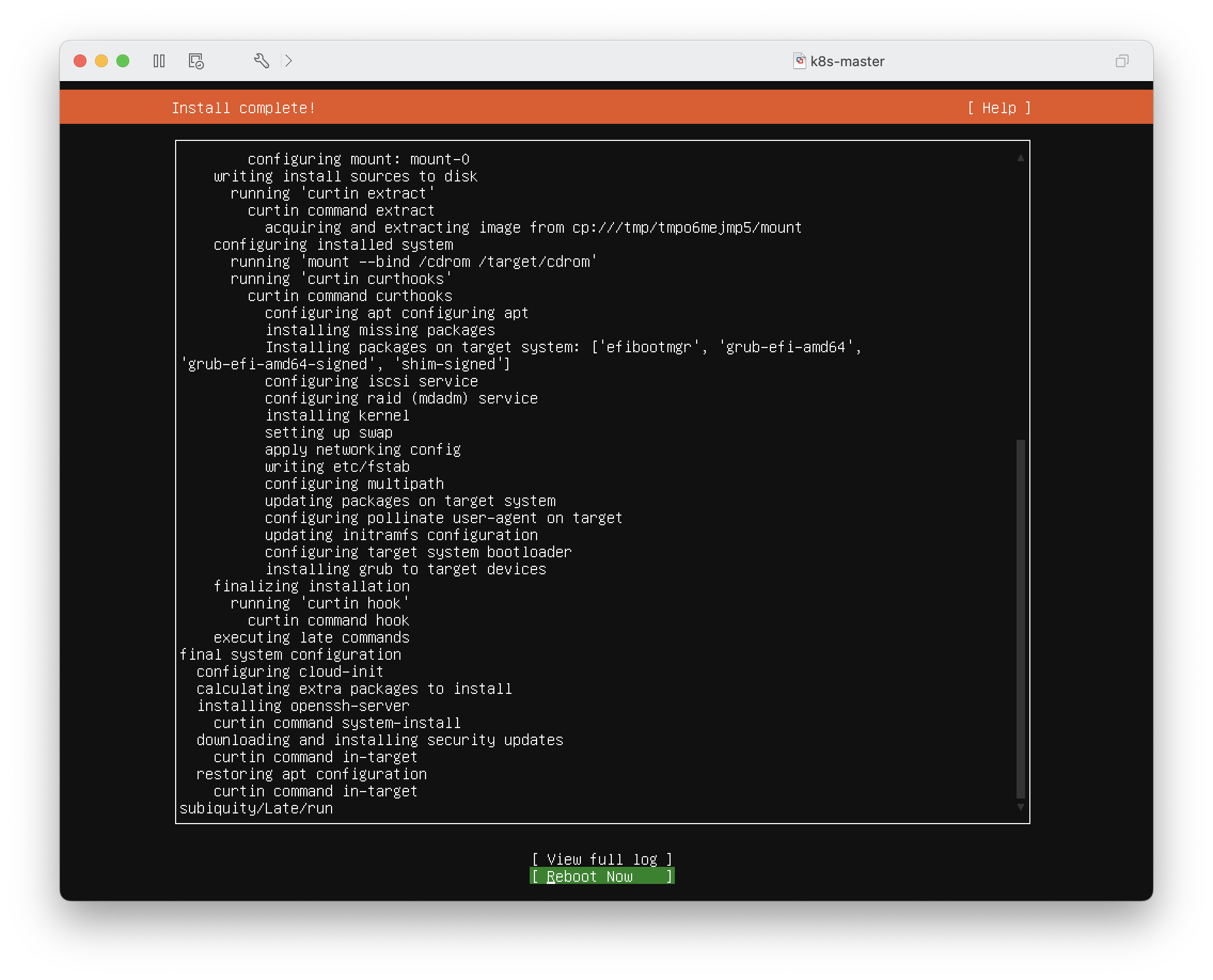

12. Install complete!

[ `Cancel update and reboot` ]

[ `Reboot Now` ]

13. Recommended (optional)

Shut the VM down and take a snapshot

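## P. Preparation

### 1. [Optional] Passwordless sudo for the current user

**[vagrant@k8s-master | k8s-worker1 | k8s-worker2]$**

> Avoids typing the password for every sudo command, which speeds up the following steps

```bash
# Password of the current user
export USER_PASS=vagrant

# Cache the sudo credentials (password: vagrant)
echo ${USER_PASS} | sudo -v -S

# Make it permanent
sudo tee /etc/sudoers.d/$USER <<-EOF
$USER ALL=(ALL) NOPASSWD: ALL
EOF

sudo cat /etc/sudoers.d/$USER
```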

### 2. [Optional] Set the root password

**[vagrant@k8s-master | k8s-worker1 | k8s-worker2]$**

```bash
export USER_PASS=vagrant

# Set the root password
(echo ${USER_PASS}; echo ${USER_PASS}) \
  | sudo passwd root

# Allow password authentication over SSH
sudo sed -i /etc/ssh/sshd_config \
  -e '/PasswordAuthentication /{s+#++;s+no+yes+}'

# Allow root login over SSH
if grep -Eq '^(#|)PermitRootLogin' /etc/ssh/sshd_config; then
  sudo sed -i /etc/ssh/sshd_config \
    -e '/^#PermitRootLogin/{s+#++;s+ .*+ yes+}' \
    -e '/^PermitRootLogin/{s+#++;s+ .*+ yes+}'
fi

sudo systemctl restart sshd
```

### 3. [Optional] Set the time zone

**[vagrant@k8s-master | k8s-worker1 | k8s-worker2]$**

```bash

sudo timedatectl set-timezone Asia/Shanghai

```

### 4. [Optional] Extend the disk

**[vagrant@k8s-master | k8s-worker1 | k8s-worker2]$**

```bash
# Logical volume path
export LVN=$(sudo lvdisplay | awk '/Path/ {print $3}')
echo -e " LV Name: \e[1;34m${LVN}\e[0;0m"

# Extend the volume and resize the file system
sudo lvextend -r -l 100%PVS $LVN

df -h / | grep -E '[0-9]+G'
```

### 5. [Optional] Accept service restarts by default when updating packages

**[vagrant@k8s-master | k8s-worker1 | k8s-worker2]$**

```bash
if [ "$(lsb_release -cs)" = "jammy" ]; then
  # Which services should be restarted?
  export NFILE=/etc/needrestart/needrestart.conf
  sudo sed -i $NFILE \
    -e '/nrconf{restart}/{s+i+a+;s+#++}'
  grep 'nrconf{restart}' $NFILE
fi
```

### 6. [Optional] Use a package mirror inside mainland China

**[vagrant@k8s-master | k8s-worker1 | k8s-worker2]$**

```bash
# C. Inside mainland China (google.com is unreachable)
if ! curl --connect-timeout 2 google.com &>/dev/null; then
  if [ "$(uname -m)" = "aarch64" ]; then
    # arm64
    export MIRROR_URL=http://mirror.nju.edu.cn/ubuntu-ports
  else
    # x86
    export MIRROR_URL=http://mirror.nju.edu.cn/ubuntu
  fi

  # Generate the apt sources
  export CODE_NAME=$(lsb_release -cs)
  export COMPONENT="main restricted universe multiverse"
  sudo tee /etc/apt/sources.list >/dev/null <<-EOF
deb $MIRROR_URL $CODE_NAME $COMPONENT
deb $MIRROR_URL $CODE_NAME-updates $COMPONENT
deb $MIRROR_URL $CODE_NAME-backports $COMPONENT
deb $MIRROR_URL $CODE_NAME-security $COMPONENT
EOF
fi

cat /etc/apt/sources.list
```

### 7. \<Required\> Set a static IP

**[vagrant@k8s-master | k8s-worker1 | k8s-worker2]$**

```bash
# Get the IP address (choose from a menu if there is more than one)
export NICP=$(ip a | awk '/inet / {print $2}' | grep -v ^127)
if [ "$(echo ${NICP} | wc -w)" != "1" ]; then
  select IP1 in ${NICP}; do
    break
  done
else
  export IP1=${NICP}
fi
```

```bash
# Get the NIC name (the second network adapter)
export NICN=$(ip a | awk '/^3:/ {print $2}' | sed 's/://')

# Get the gateway
export NICG=$(ip route | awk '/^default/ {print $3}')

# Find reachable DNS servers
unset DNS; unset DNS1
for i in 114.114.114.114 8.8.4.4 8.8.8.8; do
  if nc -w 2 -zn $i 53 &>/dev/null; then
    export DNS1=$i
    export DNS="$DNS, $DNS1"
  fi
done

printf "
addresses: \e[1;34m${IP1}\e[0;0m
ethernets: \e[1;34m${NICN}\e[0;0m
routes: \e[1;34m${NICG}\e[0;0m
nameservers: \e[1;34m${DNS#, }\e[0;0m
"
```

```bash
# Update DNS
sudo sed -i /etc/systemd/resolved.conf \
  -e '/^DNS=/s/=.*/='"$(echo ${DNS#, } | sed 's/,//g')"'/'
sudo systemctl restart systemd-resolved.service
sudo ln -sf /run/systemd/resolve/resolv.conf /etc/resolv.conf

# Update the netplan config file: static IP on the second network adapter
export NYML=/etc/netplan/50-vagrant.yaml
sudo tee ${NYML} < …
```

### 8. \<Required\> Install required packages

**[vagrant@k8s-master | k8s-worker1 | k8s-worker2]$**

```bash
# - remote access, non-interactive ssh, file editing, storageClass (NFS client)
# - Tab completion, nc, ping
sudo apt-get update && \
  sudo apt-get -y install \
    openssh-server sshpass vim nfs-common \
    bash-completion netcat-openbsd iputils-ping
```

### 9. \<Required\> Disable swap

**[vagrant@k8s-master | k8s-worker1 | k8s-worker2]$**

```bash
## You must disable swap for the kubelet to work properly

# Get the swap file name
export SWAPF=$(awk '/swap/ {print $1}' /etc/fstab)

# Disable it immediately
sudo swapoff $SWAPF

# Disable it permanently
sudo sed -i '/swap/d' /etc/fstab

# Delete the swap file
sudo rm $SWAPF

# Verify
free -h
```
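The step above deletes the swap lines from `/etc/fstab`. A gentler variant, shown here only as a sketch, comments them out instead so the change is easy to revert. `comment_out_swap` is a hypothetical helper that works as a filter on sample text; it does not touch the real `/etc/fstab`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read fstab content on stdin and comment out every
# active (not yet commented) entry that has a "swap" field.
comment_out_swap() {
  sed -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/'
}

# Example on sample content; nothing on the real system is modified.
printf '%s\n' \
  '/dev/mapper/ubuntu--vg-ubuntu--lv / ext4 defaults 0 1' \
  '/swap.img none swap sw 0 0' \
  | comment_out_swap
```

To apply it for real, the filtered output would have to be written back to `/etc/fstab` with root privileges, ideally after making a backup copy.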

### 10. \<Required\> Install the container runtime

**[vagrant@k8s-master | k8s-worker1 | k8s-worker2]$**

```bash
## Create the apt source file
export AFILE=/etc/apt/sources.list.d/docker.list
if ! curl --connect-timeout 2 google.com &>/dev/null; then
  # C. Inside mainland China
  export AURL=http://mirror.nju.edu.cn/docker-ce
else
  # A. Abroad
  export AURL=http://download.docker.com
fi
sudo tee $AFILE >/dev/null <<-EOF
deb $AURL/linux/ubuntu $(lsb_release -cs) stable
EOF

# Import the public key
# http://download.docker.com/linux/ubuntu/gpg
curl -fsSL http://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg \
  | sudo apt-key add -

# W: Key is stored in legacy trusted.gpg keyring
sudo cp /etc/apt/trusted.gpg /etc/apt/trusted.gpg.d

# Install containerd
sudo apt-get update && \
  sudo apt-get -y install containerd.io
```

```bash
# Generate the default config file
# (systemd cgroup driver enabled, pause image 3.6 replaced by 3.9)
containerd config default \
  | sed -e '/SystemdCgroup/s+false+true+' \
        -e "/sandbox_image/s+3.6+3.9+" \
  | sudo tee /etc/containerd/config.toml

if ! curl --connect-timeout 2 google.com &>/dev/null; then
  # C. Inside mainland China: add registry mirrors
  export M1='[plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]'
  export M1e='endpoint = ["https://docker.nju.edu.cn"]'
  export M2='[plugins."io.containerd.grpc.v1.cri".registry.mirrors."quay.io"]'
  export M2e='endpoint = ["https://quay.nju.edu.cn"]'
  export M3='[plugins."io.containerd.grpc.v1.cri".registry.mirrors."registry.k8s.io"]'
  export M3e='endpoint = ["https://k8s.mirror.nju.edu.cn"]'
  sudo sed -i /etc/containerd/config.toml \
    -e "/sandbox_image/s+registry.k8s.io+registry.aliyuncs.com/google_containers+" \
    -e "/registry.mirrors/a\ $M1" \
    -e "/registry.mirrors/a\ $M1e" \
    -e "/registry.mirrors/a\ $M2" \
    -e "/registry.mirrors/a\ $M2e" \
    -e "/registry.mirrors/a\ $M3" \
    -e "/registry.mirrors/a\ $M3e"
fi

# Restart the service
sudo systemctl restart containerd
```
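After the restart it is worth confirming that the two `sed` substitutions actually landed in the config file. The following is a minimal sketch; `uses_systemd_cgroup` and `sandbox_image` are hypothetical helpers, and the default path is the one written by the step above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if the given containerd config enables the
# systemd cgroup driver.
uses_systemd_cgroup() {
  grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true' "$1"
}

# Hypothetical helper: print the configured sandbox (pause) image.
sandbox_image() {
  sed -nE 's/^[[:space:]]*sandbox_image[[:space:]]*=[[:space:]]*"([^"]+)".*/\1/p' "$1"
}

# Check the live config if it exists and is readable.
config=/etc/containerd/config.toml
if [ -r "$config" ]; then
  if uses_systemd_cgroup "$config"; then
    echo "SystemdCgroup: true"
  else
    echo "SystemdCgroup: not enabled"
  fi
  echo "sandbox_image: $(sandbox_image "$config")"
fi
```

If the sandbox image still ends in `3.6`, kubeadm prints a warning about an inconsistent sandbox image during `kubeadm init`.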

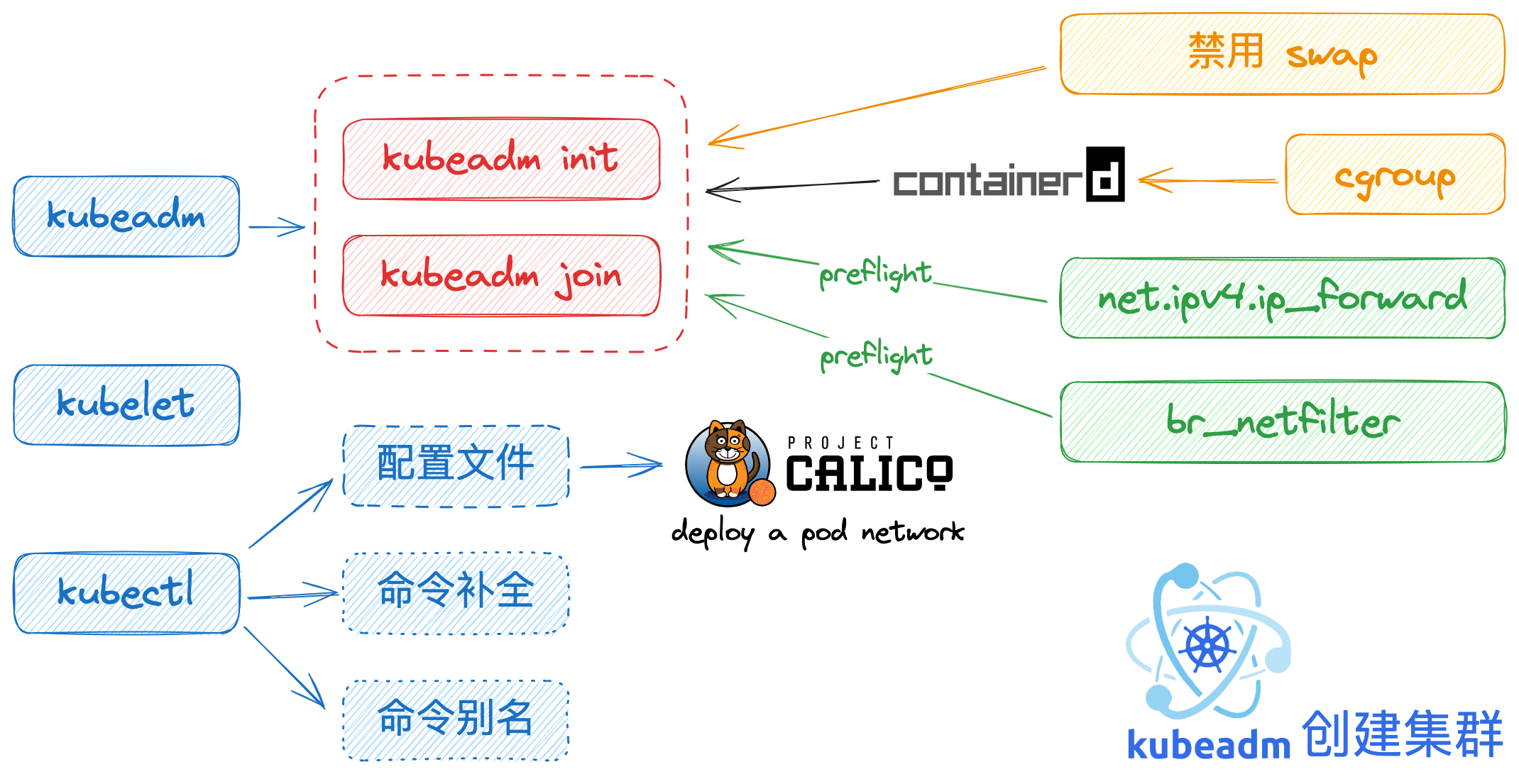

## K. Install K8s

### 11. \<Required\> Install kubeadm, kubelet, and kubectl

**[vagrant@k8s-master | k8s-worker1 | k8s-worker2]$**

```bash
## Add the Kubernetes apt repository
sudo mkdir -p /etc/apt/keyrings
if ! curl --connect-timeout 2 google.com &>/dev/null; then
  # C. Inside mainland China
  export AURL=http://mirrors.aliyun.com/kubernetes-new/core/stable/v1.29/deb
else
  # F. Abroad
  export AURL=http://pkgs.k8s.io/core:/stable:/v1.29/deb
fi

export KFILE=/etc/apt/keyrings/kubernetes-apt-keyring.gpg
curl -fsSL ${AURL}/Release.key \
  | sudo gpg --dearmor -o ${KFILE}

sudo tee /etc/apt/sources.list.d/kubernetes.list <<-EOF
deb [signed-by=${KFILE}] ${AURL} /
EOF

sudo apt-get -y update
```

> Official exam version: CKA
> https://training.linuxfoundation.cn/certificates/1
>
> Official exam version: CKS
> https://training.linuxfoundation.cn/certificates/16
>
> Official exam version: CKAD
> https://training.linuxfoundation.cn/certificates/4

```bash
# List all patch versions of 1.29
sudo apt-cache madison kubelet | grep 1.29

# Install the packages needed to use the Kubernetes apt repository,
# then install the exam version of kubelet, kubeadm, and kubectl
sudo apt-get -y install \
  apt-transport-https ca-certificates curl gpg \
  kubelet=1.29.1-* kubeadm=1.29.1-* kubectl=1.29.1-*

# Pin the versions
sudo apt-mark hold kubelet kubeadm kubectl
```
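The package repository is organized per minor release, so the `v1.29` path segment has to match the `1.29.1` packages being installed. As a sketch of that relationship, the hypothetical helpers below derive the repository URL from a full version string. The `https://pkgs.k8s.io` form is used here; the mirror URL for mainland China follows a different layout and is not covered.

```bash
#!/usr/bin/env bash
# Hypothetical helper: reduce a full version such as 1.29.1 (or v1.29.1)
# to its minor release, 1.29.
k8s_minor() {
  local version=${1#v}
  echo "${version%.*}"
}

# Hypothetical helper: build the upstream apt repository URL for a version.
k8s_repo_url() {
  echo "https://pkgs.k8s.io/core:/stable:/v$(k8s_minor "$1")/deb"
}

k8s_repo_url 1.29.1
```

When moving to another exam version, only the version string passed to `k8s_repo_url` and the package pins need to change.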

### 12. [Recommended] kubeadm command completion

**[vagrant@k8s-master | k8s-worker1 | k8s-worker2]$**

```bash
# Takes effect immediately
source <(kubeadm completion bash)

# Make it permanent
mkdir -p /home/${LOGNAME}/.kube
sudo mkdir -p /root/.kube
kubeadm completion bash \
  | tee /home/${LOGNAME}/.kube/kubeadm_completion.bash.inc \
  | sudo tee /root/.kube/kubeadm_completion.bash.inc
echo 'source ~/.kube/kubeadm_completion.bash.inc' \
  | tee -a /home/${LOGNAME}/.bashrc \
  | sudo tee -a /root/.bashrc
```

### 13. \<Required\> Configure crictl

**[vagrant@k8s-master | k8s-worker1 | k8s-worker2]$**

```bash
# Config file
sudo crictl config \
  --set runtime-endpoint=unix:///run/containerd/containerd.sock \
  --set image-endpoint=unix:///run/containerd/containerd.sock \
  --set timeout=10

# crictl Tab completion
source <(crictl completion bash)

mkdir -p /home/${LOGNAME}/.kube
sudo mkdir -p /root/.kube
crictl completion bash \
  | tee /home/${LOGNAME}/.kube/crictl_completion.bash.inc \
  | sudo tee /root/.kube/crictl_completion.bash.inc
echo 'source ~/.kube/crictl_completion.bash.inc' \
  | tee -a /home/${LOGNAME}/.bashrc \
  | sudo tee -a /root/.bashrc

# Takes effect after logging out and back in
sudo usermod -aG root ${LOGNAME}
```

### 14. \<Required\> Checks before using kubeadm

**[vagrant@k8s-master | k8s-worker1 | k8s-worker2]$**

```bash
## 1. Bridge
# [ERROR FileContent-.proc-sys-net-bridge-bridge-nf-call-iptables]: /proc/sys/net/bridge/bridge-nf-call-iptables does not exist
sudo apt-get -y install bridge-utils && \
  echo br_netfilter \
    | sudo tee /etc/modules-load.d/br.conf && \
  sudo modprobe br_netfilter
```

```bash
## 2. Kernel parameters
# [ERROR FileContent-.proc-sys-net-ipv4-ip_forward]: /proc/sys/net/ipv4/ip_forward contents are not set to 1
sudo tee /etc/sysctl.d/k8s.conf <<-EOF
net.ipv4.ip_forward=1
EOF

# Apply immediately
sudo sysctl -p /etc/sysctl.d/k8s.conf
```
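Both error messages quoted above refer to files under `/proc/sys`, so the result of the two fixes can be verified by reading those files directly. A minimal sketch follows; `sysctl_path` and `sysctl_is` are hypothetical helpers, not kubeadm commands.

```bash
#!/usr/bin/env bash
# Hypothetical helper: map a sysctl key to its file under /proc/sys
# (dots in the key become path separators).
sysctl_path() {
  echo "/proc/sys/${1//.//}"
}

# Hypothetical helper: succeed if the key exists and has the expected value.
sysctl_is() {
  local file
  file=$(sysctl_path "$1")
  [ -r "$file" ] && [ "$(cat "$file")" = "$2" ]
}

# The two settings that the kubeadm pre-flight errors above complain about.
for key in net.ipv4.ip_forward net.bridge.bridge-nf-call-iptables; do
  if sysctl_is "$key" 1; then
    echo "OK   $key = 1"
  else
    echo "FAIL $key is not 1, or $(sysctl_path "$key") does not exist"
  fi
done
```

A missing `bridge-nf-call-iptables` file usually means the `br_netfilter` module is not loaded.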

### 15. \<Required\> Set the hostname

**[vagrant@k8s-master]$**

```bash

sudo hostnamectl set-hostname k8s-master

```

**[vagrant@k8s-worker1]$**

```bash

sudo hostnamectl set-hostname k8s-worker1

```

**[vagrant@k8s-worker2]$**

```bash

sudo hostnamectl set-hostname k8s-worker2

```

### 16. \<Required\> Edit hosts

**[vagrant@k8s-master | k8s-worker1 | k8s-worker2]$**

```bash
# Print the IP addresses and hostname for easy copying
echo $(hostname -I) $(hostname)
```

```bash
sudo tee -a /etc/hosts >/dev/null < …
```

### 17. \<Required\> Initialize the cluster with kubeadm init

**[vagrant@k8s-master]$**

```bash
export REGISTRY_MIRROR=registry.aliyuncs.com/google_containers
export POD_CIDR=172.16.1.0/16
export SERVICE_CIDR=172.17.1.0/18

if ! curl --connect-timeout 2 google.com &>/dev/null; then
  # C. Inside mainland China: pull images from the mirror
  sudo kubeadm config images pull \
    --kubernetes-version 1.29.1 \
    --image-repository ${REGISTRY_MIRROR}
  sudo kubeadm init \
    --kubernetes-version 1.29.1 \
    --apiserver-advertise-address=${IP1%/*} \
    --pod-network-cidr=${POD_CIDR} \
    --service-cidr=${SERVICE_CIDR} \
    --node-name=$(hostname -s) \
    --image-repository=${REGISTRY_MIRROR}
else
  # A. Abroad
  sudo kubeadm config images pull \
    --kubernetes-version 1.29.1
  sudo kubeadm init \
    --kubernetes-version 1.29.1 \
    --apiserver-advertise-address=${IP1%/*} \
    --pod-network-cidr=${POD_CIDR} \
    --service-cidr=${SERVICE_CIDR} \
    --node-name=$(hostname -s)
fi
```

> [init] Using Kubernetes version: v1.29.1

> [preflight] Running pre-flight checks

> [preflight] Pulling images required for setting up a Kubernetes cluster

> [preflight] This might take a minute or two, depending on the speed of your internet connection

> [preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

> W0612 04:18:15.295414 10552 images.go:80] could not find officially supported version of etcd for Kubernetes v1.29.1, falling back to the nearest etcd version (3.5.7-0)

> W0612 04:18:30.785239 10552 checks.go:835] detected that the sandbox image "registry.aliyuncs.com/google_containers/pause:3.6" of the container runtime is inconsistent with that used by kubeadm. It is recommended that using "registry.aliyuncs.com/google_containers/pause:3.9" as the CRI sandbox image.

> [certs] Using certificateDir folder "/etc/kubernetes/pki"

> [certs] Generating "ca" certificate and key

> [certs] Generating "apiserver" certificate and key

> [certs] apiserver serving cert is signed for DNS names [k8s-master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.8.3]

> [certs] Generating "apiserver-kubelet-client" certificate and key

> [certs] Generating "front-proxy-ca" certificate and key

> [certs] Generating "front-proxy-client" certificate and key

> [certs] Generating "etcd/ca" certificate and key

> [certs] Generating "etcd/server" certificate and key

> [certs] etcd/server serving cert is signed for DNS names [k8s-master localhost] and IPs [192.168.8.3 127.0.0.1 ::1]

> [certs] Generating "etcd/peer" certificate and key

> [certs] etcd/peer serving cert is signed for DNS names [k8s-master localhost] and IPs [192.168.8.3 127.0.0.1 ::1]

> [certs] Generating "etcd/healthcheck-client" certificate and key

> [certs] Generating "apiserver-etcd-client" certificate and key

> [certs] Generating "sa" key and public key

> [kubeconfig] Using kubeconfig folder "/etc/kubernetes"

> [kubeconfig] Writing "admin.conf" kubeconfig file

> [kubeconfig] Writing "kubelet.conf" kubeconfig file

> [kubeconfig] Writing "controller-manager.conf" kubeconfig file

> [kubeconfig] Writing "scheduler.conf" kubeconfig file

> [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

> [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

> [kubelet-start] Starting the kubelet

> [control-plane] Using manifest folder "/etc/kubernetes/manifests"

> [control-plane] Creating static Pod manifest for "kube-apiserver"

> [control-plane] Creating static Pod manifest for "kube-controller-manager"

> [control-plane] Creating static Pod manifest for "kube-scheduler"

> [etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

> W0612 04:18:33.894812 10552 images.go:80] could not find officially supported version of etcd for Kubernetes v1.29.1, falling back to the nearest etcd version (3.5.7-0)

> [wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

> [apiclient] All control plane components are healthy after 6.502471 seconds

> [upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

> [kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

> [upload-certs] Skipping phase. Please see --upload-certs

> [mark-control-plane] Marking the node k8s-master as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

> [mark-control-plane] Marking the node k8s-master as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]

> [bootstrap-token] Using token: abcdef.0123456789abcdef

> [bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

> [bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

> [bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

> [bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

> [bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

> [bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

> [kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

> [addons] Applied essential addon: CoreDNS

> [addons] Applied essential addon: kube-proxy

>

> Your Kubernetes control-plane has initialized successfully!
>
> To start using your cluster, you need to run the following as a regular user:
>
> ```bash
> mkdir -p $HOME/.kube
> sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
> sudo chown $(id -u):$(id -g) $HOME/.kube/config
> ```
>
> Alternatively, if you are the root user, you can run:
>
> ```bash
> export KUBECONFIG=/etc/kubernetes/admin.conf
> ```
>
> You should now deploy a pod network to the cluster.
> Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
> https://kubernetes.io/docs/concepts/cluster-administration/addons/
>
> Then you can join any number of worker nodes by running the following on each as root:
>
> ```bash
> kubeadm join 192.168.8.3:6443 --token abcdef.0123456789abcdef \
>   --discovery-token-ca-cert-hash sha256:49ff7a97c017153baee67c8f44fa84155b8cf06ca8cee067f766ec252cb8d1ac
> ```
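The `POD_CIDR` and `SERVICE_CIDR` values passed to `kubeadm init` are written with host bits set (`172.16.1.0/16`, `172.17.1.0/18`), and the two ranges should not overlap each other. The sketch below shows which networks those values denote and checks them for overlap. The helper functions are hypothetical and handle IPv4 only.

```bash
#!/usr/bin/env bash
# Convert a dotted IPv4 address to an integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Convert an integer back to a dotted IPv4 address.
int_to_ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# Print the network that a CIDR denotes, with the host bits cleared.
cidr_network() {
  local ip=${1%/*} bits=${1#*/}
  local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  echo "$(int_to_ip $(( $(ip_to_int "$ip") & mask )))/$bits"
}

# Succeed if two CIDRs overlap: compare both under the shorter prefix.
cidrs_overlap() {
  local a_ip=${1%/*} a_bits=${1#*/} b_ip=${2%/*} b_bits=${2#*/}
  local bits=$(( a_bits < b_bits ? a_bits : b_bits ))
  local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$a_ip") & mask )) -eq $(( $(ip_to_int "$b_ip") & mask )) ]
}

POD_CIDR=172.16.1.0/16
SERVICE_CIDR=172.17.1.0/18
echo "pod network:     $(cidr_network "$POD_CIDR")"
echo "service network: $(cidr_network "$SERVICE_CIDR")"
if cidrs_overlap "$POD_CIDR" "$SERVICE_CIDR"; then
  echo "the ranges overlap"
else
  echo "the ranges do not overlap"
fi
```

The same `cidrs_overlap` check can be run against the node network, here `192.168.8.0/24` judging by the addresses in the init output.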

### 18. \<Required\> Join the cluster with kubeadm join

**[vagrant@k8s-worker1 | k8s-worker2]$**

```bash
sudo \
  kubeadm join 192.168.8.3:6443 --token abcdef.0123456789abcdef \
  --discovery-token-ca-cert-hash sha256:49ff7a97c017153baee67c8f44fa84155b8cf06ca8cee067f766ec252cb8d1ac
```
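The token and hash in the join command are easy to damage when copying them between terminals. As a sketch, the hypothetical helpers below check their shape before the command is run: a bootstrap token has the form `[a-z0-9]{6}.[a-z0-9]{16}`, and the CA certificate hash is `sha256:` followed by 64 hexadecimal digits. They do not verify that the values are valid for the cluster.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if the argument looks like a bootstrap token.
is_valid_token() {
  [[ $1 =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]
}

# Hypothetical helper: succeed if the argument looks like a CA cert hash.
is_valid_ca_hash() {
  [[ $1 =~ ^sha256:[0-9a-f]{64}$ ]]
}

# Values taken from the sample join command above.
token=abcdef.0123456789abcdef
ca_hash=sha256:49ff7a97c017153baee67c8f44fa84155b8cf06ca8cee067f766ec252cb8d1ac

if is_valid_token "$token" && is_valid_ca_hash "$ca_hash"; then
  echo "token and hash are well-formed"
else
  echo "token or hash is malformed"
fi
```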

### 19. \<Required\> Client: kubeconfig file

**[vagrant@k8s-master]$**

```bash
# - vagrant
mkdir -p /home/${LOGNAME}/.kube
sudo cat /etc/kubernetes/admin.conf \
  | tee /home/${LOGNAME}/.kube/config

# - root
echo "export KUBECONFIG=/etc/kubernetes/admin.conf" \
  | sudo tee -a /root/.bashrc
```

### 20. \<Required\> Create the Pod network

**[vagrant@k8s-master]$**

> See the hint in the init output
>
> - https://www.tigera.io/project-calico/
>   Home -> Calico Open Source
>   Install -> Calico -> Kubernetes -> Self-managed on-premises
>   Install Calico -> Manifest -> Install Calico with Kubernetes API datastore, 50 nodes or less
>
> ```bash
> curl https://raw.githubusercontent.com/projectcalico/calico/v3.26.1/manifests/calico.yaml -O
> ```

```bash
# 1. URL
if ! curl --connect-timeout 2 google.com &>/dev/null; then
  # C. Inside mainland China
  export CURL=http://k8s.ruitong.cn:8080/K8s
else
  # A. Abroad
  export CURL=https://raw.githubusercontent.com/projectcalico
fi

# 2. FILE
export CFILE=calico/v3.26.4/manifests/calico.yaml

# 3. INSTALL
kubectl apply -f ${CURL}/${CFILE}
```

### 21. [Recommended] Client: kubectl command completion

**[vagrant@k8s-master | k8s-worker1 | k8s-worker2]$**

> View the help
>
> ```bash
> $ kubectl completion --help
> ```

```bash
# Takes effect immediately
source <(kubectl completion bash)

# Make it permanent
kubectl completion bash \
  | tee /home/${LOGNAME}/.kube/completion.bash.inc \
  | sudo tee /root/.kube/completion.bash.inc
echo 'source ~/.kube/completion.bash.inc' \
  | tee -a /home/${LOGNAME}/.bashrc \
  | sudo tee -a /root/.bashrc
```

### 22. [Recommended] Client: kubectl command alias

**[vagrant@k8s-master | k8s-worker1 | k8s-worker2]$**

> Reference: https://kubernetes.io/zh-cn/docs/reference/kubectl/cheatsheet/

```bash
# Takes effect immediately
alias k='kubectl'
complete -F __start_kubectl k

# Make it permanent
cat <<-EOF \
  | tee -a /home/${LOGNAME}/.bashrc \
  | sudo tee -a /root/.bashrc
alias k='kubectl'
complete -F __start_kubectl k
EOF
```

## C. Verify the environment

**[vagrant@k8s-master]**

```bash
$ kubectl get nodes
NAME          STATUS   ROLES           AGE     VERSION
k8s-master    Ready    control-plane   3m42s   v1.29.1
k8s-worker1   Ready    <none>          60s     v1.29.1
k8s-worker2   Ready    <none>          54s     v1.29.1

$ kubectl get pod -A
NAMESPACE     NAME                                       READY   STATUS    RESTARTS   AGE
kube-system   calico-kube-controllers-786b679988-jqmtn   1/1     Running   0          2m58s
kube-system   calico-node-4sbwf                          1/1     Running   0          71s
kube-system   calico-node-cfq42                          1/1     Running   0          65s
kube-system   calico-node-xgmqv                          1/1     Running   0          2m58s
kube-system   coredns-7bdc4cb885-grj7m                   1/1     Running   0          3m46s
kube-system   coredns-7bdc4cb885-hjdkp                   1/1     Running   0          3m46s
kube-system   etcd-k8s-master                            1/1     Running   0          3m50s
kube-system   kube-apiserver-k8s-master                  1/1     Running   0          3m50s
kube-system   kube-controller-manager-k8s-master         1/1     Running   0          3m50s
kube-system   kube-proxy-2cgvn                           1/1     Running   0          3m46s
kube-system   kube-proxy-2zh5k                           1/1     Running   0          65s
kube-system   kube-proxy-nd5z8                           1/1     Running   0          71s
kube-system   kube-scheduler-k8s-master                  1/1     Running   0          3m50s

$ kubectl get componentstatuses
Warning: v1 ComponentStatus is deprecated in v1.19+
NAME                 STATUS    MESSAGE                         ERROR
etcd-0               Healthy   {"health":"true","reason":""}
controller-manager   Healthy   ok
scheduler            Healthy   ok
```
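Reading the node list by eye works for three nodes, but the same check can be scripted. The sketch below uses a hypothetical helper, `not_ready_nodes`, that filters the text output of `kubectl get nodes`. It runs here on sample text with a made-up `NotReady` worker, so it needs no cluster.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get nodes` output on stdin and print
# the names of nodes whose STATUS column is anything other than "Ready".
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Sample output; k8s-worker2 is shown as NotReady for illustration.
sample='NAME          STATUS     ROLES           AGE     VERSION
k8s-master    Ready      control-plane   3m42s   v1.29.1
k8s-worker1   Ready      <none>          60s     v1.29.1
k8s-worker2   NotReady   <none>          54s     v1.29.1'

printf '%s\n' "$sample" | not_ready_nodes
```

On the control-plane node the helper would be used as `kubectl get nodes | not_ready_nodes`. Note that a cordoned node reports `Ready,SchedulingDisabled` and is therefore listed as well.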

## A. Appendix

### A1. Command for joining a new node to the K8s cluster

> - Check the output of the kubeadm init command
> - Or regenerate it with the kubeadm token create command

**[vagrant@k8s-master]$**

```bash
kubeadm token create --print-join-command
```

### A2. Reset the environment with kubeadm reset

> Reverts the changes made to this host by ==kubeadm init== or ==kubeadm join==

**[vagrant@k8s-master]$**

```bash
echo y | sudo kubeadm reset
```

### A3. kubectl Err1

> The connection to the server localhost:8080 was refused - did you specify the right host or port?

**[vagrant@k8s-worker2]$**

```bash
# Create the directory
mkdir -p ~/.kube

# Copy the config file
scp root@k8s-master:/etc/kubernetes/admin.conf \
  ~/.kube/config

# Verify
kubectl get node
```

### A4. Deploying on RHEL

> - Disable firewalld
> - Disable SELinux

```bash
## firewalld
systemctl disable --now firewalld

## SELinux
sed -i '/^SELINUX=/s/=.*/=disabled/' \
  /etc/selinux/config

# Reboot to apply
reboot
```

### A5. Client - Windows

> URL: https://kubernetes.io/zh-cn/docs/tasks/tools/install-kubectl-windows/
>
> ```powershell
> curl.exe -LO "https://dl.k8s.io/release/v1.29.1/bin/windows/amd64/kubectl.exe"
> ```

- Commands

```powershell
# Show the search path for external commands
Get-ChildItem Env:
$Env:PATH

# Download
$Env:FURL = "http://k8s.ruitong.cn:8080/K8s/kubectl.exe"
curl.exe -L# $Env:FURL -o $Env:LOCALAPPDATA\Microsoft\WindowsApps\kubectl.exe
```

- Config file

```powershell
# Create the folder
New-Item -Path $ENV:USERPROFILE -Name .kube -type directory

# Copy the config file (password when prompted: vagrant)
scp vagrant@192.168.8.3:/home/vagrant/.kube/config $ENV:USERPROFILE\.kube\config

# Verify
kubectl get node
```

- Auto-completion

> ```powershell
> kubectl.exe completion -h
> ```

```powershell
# Takes effect immediately
kubectl completion powershell | Out-String | Invoke-Expression

# Save the completion script for later sessions
kubectl completion powershell > $ENV:USERPROFILE\.kube\completion.ps1
```

## 准备培训环境

参考: [Kubernetes 文档](https://kubernetes.io/zh/docs/) / [入门](https://kubernetes.io/zh/docs/setup/) / [生产环境](https://kubernetes.io/zh/docs/setup/production-environment/) / [使用部署工具安装 Kubernetes](https://kubernetes.io/zh/docs/setup/production-environment/tools/) / [使用 kubeadm 引导集群](https://kubernetes.io/zh/docs/setup/production-environment/tools/kubeadm/) / [安装 kubeadm](https://kubernetes.io/zh/docs/setup/production-environment/tools/kubeadm/install-kubeadm/)

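## B. [Before you begin](https://kubernetes.io/zh-cn/docs/setup/production-environment/tools/kubeadm/install-kubeadm/#%E5%87%86%E5%A4%87%E5%BC%80%E5%A7%8B)

- A compatible Linux host. The Kubernetes project provides generic instructions for Linux distributions based on Debian and Red Hat, and for distributions without a package manager.

- 2 GB or more of RAM per machine (any less leaves little room for your applications).

- 2 CPU cores or more.

- Full network connectivity between all machines in the cluster (a public or a private network is fine).

- A unique hostname, MAC address, and product_uuid for every node. See [here](https://kubernetes.io/zh-cn/docs/setup/production-environment/tools/kubeadm/install-kubeadm/#verify-mac-address) for more details.

- Certain ports open on your machines. See [here](https://kubernetes.io/zh-cn/docs/setup/production-environment/tools/kubeadm/install-kubeadm/#check-required-ports) for more details.

- Swap disabled. You must disable swap for the kubelet to work properly.

  - For example, `sudo swapoff -a` disables swap temporarily. To make the change persist across reboots, make sure swap is also disabled in configuration files such as `/etc/fstab` or `systemd.swap`, depending on how your system is configured.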
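The checklist above can be spot-checked on each node with a few read-only commands. The following is a rough sketch rather than an official preflight: the helper `meets_minimum` is hypothetical, and the 1700 MB memory threshold is an assumption chosen because `MemTotal` on a nominal 2 GB machine reports slightly less than 2048 MB.

```bash
#!/usr/bin/env bash
# Hypothetical helper: compare a measured value with a required minimum.
meets_minimum() {  # usage: meets_minimum LABEL ACTUAL REQUIRED
  if [ "$2" -ge "$3" ]; then
    echo "OK    $1: $2 (need >= $3)"
  else
    echo "FAIL  $1: $2 (need >= $3)"
    return 1
  fi
}

cpu_count=$(nproc)
mem_mb=$(awk '/^MemTotal:/ {print int($2 / 1024)}' /proc/meminfo)
meets_minimum "CPU cores" "$cpu_count" 2 || true
meets_minimum "RAM in MB" "$mem_mb" 1700 || true   # assumed threshold, see above

# /proc/swaps has one header line; any further line is an active swap area.
if [ -r /proc/swaps ] && [ "$(awk 'NR > 1' /proc/swaps | wc -l)" -gt 0 ]; then
  echo "FAIL  swap is enabled (run: sudo swapoff -a)"
else
  echo "OK    swap is disabled"
fi

# The hostname must be unique across nodes; compare it by hand between hosts.
# product_uuid needs root: sudo cat /sys/class/dmi/id/product_uuid
echo "hostname: $(uname -n)"
```

The script only reports; it changes nothing, so it is safe to run repeatedly on every node.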

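Virtual machine

> New... /

> Create a custom virtual machine /

> Linux / `Ubuntu 64-bit`

- Settings

| ID | VM setting | Recommended | Default | Notes |
| :--: | :--------: | :--------------------: | :-----: | :----------: |
| 1 | Processors | - | 2 | Minimum requirement |
| 2 | Memory | - | 4096 MB | Saves memory |
| 3 | Display | Uncheck `Accelerate 3D Graphics` | Checked | Saves memory |
| 4.1 | Network Adapter 1 | - | nat | Needs Internet access |
| 4.2 | Network Adapter 2 | - | host only | Fixed IP |
| 5 | Hard disk | `40` GB | 20 GB | Enough space for the exercises |
| 6 | Firmware type | UEFI | Legacy BIOS | VMware Fusion supports nested virtualization |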

- Resulting configuration

| ID | HOSTNAME | CPU cores | RAM | DISK | NIC |
| :--: | :------------------: | :----------: | :------------: | :-------: | :-----: |
| 1 | `k8s-master` | 2 or more | 4 GB or more | 40 GB | 1. nat |

**[vagrant@k8s-master | k8s-worker1 | k8s-worker2]$**

```bash
if [ "$(lsb_release -cs)" = "jammy" ]; then
  # Which services should be restarted?
  # Switch needrestart from interactive ('i') to automatic ('a') mode
  export NFILE=/etc/needrestart/needrestart.conf
  sudo sed -i $NFILE \
    -e '/nrconf{restart}/{s+i+a+;s+#++}'
  grep 'nrconf{restart}' $NFILE
fi
```
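The `sed` expression above is terse, so it may help to watch it act on a single line. A minimal sketch, assuming GNU sed and assuming the stock file carries the setting as `#$nrconf{restart} = 'i';` (the scratch file below is sample input, not the real configuration):

```bash
#!/usr/bin/env bash
# Scratch file holding the commented, interactive setting as sample input.
conf=$(mktemp)
printf '%s\n' "#\$nrconf{restart} = 'i';" > "$conf"

# Same expression as above: the first "i" on the line becomes "a",
# then the first "#" is dropped, which uncomments the setting.
sed -i -e '/nrconf{restart}/{s+i+a+;s+#++}' "$conf"
after_first=$(cat "$conf")

# A second run finds neither an "i" nor a "#", so nothing changes.
sed -i -e '/nrconf{restart}/{s+i+a+;s+#++}' "$conf"
after_second=$(cat "$conf")

echo "$after_first"
```

Because the second run is a no-op, repeating the step on a node that was already configured should be harmless.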

### 6. [Optional] Use a package mirror inside China

**[vagrant@k8s-master | k8s-worker1 | k8s-worker2]$**

```bash
# C. Inside China (detected by google.com being unreachable)
if ! curl --connect-timeout 2 google.com &>/dev/null; then
  if [ "$(uname -m)" = "aarch64" ]; then
    # arm64
    export MIRROR_URL=http://mirror.nju.edu.cn/ubuntu-ports
  else
    # x86
    export MIRROR_URL=http://mirror.nju.edu.cn/ubuntu
  fi

  # Generate the package sources
  export CODE_NAME=$(lsb_release -cs)
  export COMPONENT="main restricted universe multiverse"
  sudo tee /etc/apt/sources.list >/dev/null <<-EOF
deb $MIRROR_URL $CODE_NAME $COMPONENT
deb $MIRROR_URL $CODE_NAME-updates $COMPONENT
deb $MIRROR_URL $CODE_NAME-backports $COMPONENT
deb $MIRROR_URL $CODE_NAME-security $COMPONENT
EOF
fi

cat /etc/apt/sources.list
```
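Before overwriting `/etc/apt/sources.list`, the generated entries can be previewed on standard output. The sketch below mirrors the heredoc above; the function name `render_sources` is hypothetical, and the `jammy` argument is just an example release name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print sources.list entries for a mirror and a release.
render_sources() {  # usage: render_sources MIRROR_URL CODE_NAME
  local components="main restricted universe multiverse"
  local suffix
  for suffix in "" -updates -backports -security; do
    echo "deb $1 $2$suffix $components"
  done
}

# Preview only; nothing is written to /etc/apt/sources.list here.
render_sources http://mirror.nju.edu.cn/ubuntu jammy
```

Once the preview looks right, the same output could be piped into `sudo tee /etc/apt/sources.list`, followed by `sudo apt update` to confirm the mirror responds.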

### 7. \<Required\> Set a static IP

**[vagrant@k8s-master | k8s-worker1 | k8s-worker2]$**

```bash
# Get the IP (in CIDR notation); show a menu if there is more than one candidate
export NICP=$(ip a | awk '/inet / {print $2}' | grep -v ^127)
if [ "$(echo ${NICP} | wc -w)" != "1" ]; then
  select IP1 in ${NICP}; do
    break
  done
else
  export IP1=${NICP}
fi
```
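The address filter above can be exercised against canned `ip a` output to see which lines it keeps. This is an illustrative sketch: the helper `list_ipv4` is hypothetical, and the interface names and addresses in the sample text are made up.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `ip a` style text on stdin and print the
# non-loopback IPv4 addresses, using the same filter as above.
list_ipv4() {
  awk '/inet / {print $2}' | grep -v '^127'
}

# Sample input (invented values for illustration only).
sample_ip_a='1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536
    inet 127.0.0.1/8 scope host lo
2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500
    inet 172.16.10.5/24 brd 172.16.10.255 scope global dynamic ens33
    inet6 fe80::1/64 scope link
3: ens34: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500
    inet 192.168.8.3/24 brd 192.168.8.255 scope global ens34'

found=$(printf '%s\n' "$sample_ip_a" | list_ipv4)
echo "$found"
```

The pattern `inet ` (with a trailing space) skips the `inet6` line, and the loopback address is filtered out by `grep`. With two adapters, as in the VM settings above, two candidates remain, which is the case where the `select` menu appears.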

```bash
# Get the NIC name: use the second NIC (interface index 3, after lo and the first NIC)
export NICN=$(ip a | awk '/^3:/ {print $2}' | sed 's/://')

# Get the gateway
export NICG=$(ip route | awk '/^default/ {print $3}')

# Get DNS: keep every server that answers on TCP port 53
unset DNS; unset DNS1
for i in 114.114.114.114 8.8.4.4 8.8.8.8; do
  if nc -w 2 -zn $i 53 &>/dev/null; then
    export DNS1=$i
    export DNS="$DNS, $DNS1"
  fi
done

# Print the collected values
printf "
addresses: \e[1;34m${IP1}\e[0;0m
ethernets: \e[1;34m${NICN}\e[0;0m
routes: \e[1;34m${NICG}\e[0;0m
nameservers: \e[1;34m${DNS#, }\e[0;0m
"
```
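Two small idioms carry the block above: the `${DNS#, }` expansion that trims the leading separator, and the `awk` filter that picks the gateway from the routing table. The sketch below isolates both so they can be tried without network access; the helper names `join_dns` and `default_gateway` and the sample route line are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: join servers the same way the loop above does.
join_dns() {
  local joined="" server
  for server in "$@"; do
    joined="$joined, $server"
  done
  # Strip the leading ", " left by the first iteration.
  echo "${joined#, }"
}

# Hypothetical helper: pick the gateway out of `ip route` style text on stdin.
default_gateway() {
  awk '/^default/ {print $3}'
}

dns_list=$(join_dns 114.114.114.114 8.8.8.8)
echo "comma-separated: $dns_list"
# Removing the commas yields a space-separated list.
echo "space-separated: $(echo "$dns_list" | sed 's/,//g')"
echo "default via 172.16.10.2 dev ens33 proto dhcp" | default_gateway
```

On a host with more than one default route, the `awk` filter prints one gateway per route, so the value of `NICG` is worth checking in the summary before it is written into any configuration.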

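```bash
# Update DNS (the stock resolved.conf ships this key commented out as "#DNS=",
# so the pattern accepts an optional leading "#")
sudo sed -i /etc/systemd/resolved.conf \
  -e '/^#\?DNS=/s/.*/DNS='"$(echo ${DNS#, } | sed 's/,//g')"'/'
sudo systemctl restart systemd-resolved.service
sudo ln -sf /run/systemd/resolve/resolv.conf /etc/resolv.conf

# Update the NIC configuration file: static IP on the second NIC
export NYML=/etc/netplan/50-vagrant.yaml
sudo tee ${NYML} <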
